#include <itkFiniteDifferenceGradientDescentOptimizer.h>

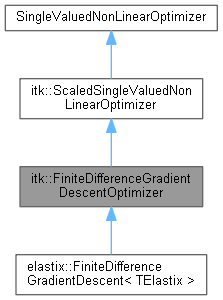

An optimizer based on gradient descent ...

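If C(x) is the cost function to be minimised, the parameters are updated at each iteration k by

\[ x(k+1)_j = x(k)_j - a(k) \, \frac{C(x(k)_j + c(k)) - C(x(k)_j - c(k))}{2\,c(k)}, \]

for all parameters j. Here C(x(k)_j ± c(k)) denotes the cost evaluated with only the j-th parameter shifted by ±c(k).

From this equation it is clear that this is a gradient descent optimizer that uses a central finite difference approximation of the gradient.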
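The update rule can be sketched in C++ as one iteration over a plain `std::vector<double>`. This is a minimal illustration under stated assumptions, not the elastix implementation: `FiniteDifferenceStep`, `Params` and `CostFunction` are hypothetical names, the cost function is any callable, and the parameter scaling handled by the `ScaledSingleValuedNonLinearOptimizer` base class is ignored. All 2n cost evaluations are made around the same point x(k) before the new parameters are returned.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical types for this sketch; elastix works on ITK parameter arrays instead.
using Params = std::vector<double>;
using CostFunction = std::function<double(const Params &)>;

// One iteration of finite difference gradient descent:
//   x(k+1)_j = x(k)_j - a(k) * [C(x(k)_j + c(k)) - C(x(k)_j - c(k))] / (2 c(k))
// Every cost evaluation is made around the current point x(k); only the j-th
// parameter is shifted while the others keep their current values.
Params FiniteDifferenceStep(const CostFunction & cost, const Params & x, double a_k, double c_k)
{
  assert(c_k > 0.0); // c(k) appears in the denominator
  Params probe = x;  // working copy that receives the +/- c(k) shifts
  Params next(x.size());
  for (std::size_t j = 0; j < x.size(); ++j)
  {
    probe[j] = x[j] + c_k;
    const double valuePlus = cost(probe);
    probe[j] = x[j] - c_k;
    const double valueMinus = cost(probe);
    probe[j] = x[j]; // restore before probing the next parameter

    const double gradient_j = (valuePlus - valueMinus) / (2.0 * c_k);
    next[j] = x[j] - a_k * gradient_j;
  }
  return next;
}
```

For a quadratic cost the central difference is exact: with C(x) = (x_0 - 3)^2 + 2(x_1 + 1)^2, starting at (0, 0) with a(k) = 0.1 and c(k) = 0.5, one call moves the parameters to approximately (0.6, -0.4).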

The gain a(k) at iteration k, with a, A and alpha set through SetParam_a, SetParam_A and SetParam_alpha, is defined by

\[ a(k) = \frac{a}{(A + k + 1)^{\alpha}}. \]

The perturbation size c(k) at iteration k, with c and gamma set through SetParam_c and SetParam_gamma, is defined by

\[ c(k) = \frac{c}{(k + 1)^{\gamma}}. \]
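The two decay sequences can be evaluated directly. The sketch below uses hypothetical free functions `ComputeGain` and `ComputePerturbation` that mirror the protected members `Compute_a` and `Compute_c`, assuming those members implement exactly the formulas above; the hypothetical `GainParameters` defaults are the initial values of `m_Param_a`, `m_Param_A`, `m_Param_alpha`, `m_Param_c` and `m_Param_gamma` listed under Private Attributes.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical container for the five tuning parameters; the defaults are the
// initial values of m_Param_a, m_Param_A, m_Param_alpha, m_Param_c, m_Param_gamma.
struct GainParameters
{
  double a = 1.0;
  double A = 1.0;
  double alpha = 0.602;
  double c = 1.0;
  double gamma = 0.101;
};

// Gain a(k) = a / (A + k + 1)^alpha, assumed to match Compute_a.
double ComputeGain(const GainParameters & p, unsigned long k)
{
  assert(p.A >= 0.0); // keeps the base A + k + 1 positive
  return p.a / std::pow(p.A + static_cast<double>(k) + 1.0, p.alpha);
}

// Perturbation size c(k) = c / (k + 1)^gamma, assumed to match Compute_c.
double ComputePerturbation(const GainParameters & p, unsigned long k)
{
  return p.c / std::pow(static_cast<double>(k) + 1.0, p.gamma);
}
```

With these defaults c(0) = 1 and a(0) = 1 / 2^0.602 ≈ 0.66. Because alpha is larger than gamma, the gain decays faster than the perturbation size as k grows.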

Note the similarities to the SimultaneousPerturbation optimizer and the StandardGradientDescent optimizer.

Definition at line 55 of file itkFiniteDifferenceGradientDescentOptimizer.h.

| Public Types | |
|---|---|
| using | `ConstPointer = SmartPointer<const Self>` |
| using | `Pointer = SmartPointer<Self>` |
| using | Self = FiniteDifferenceGradientDescentOptimizer |
| enum | StopConditionType { MaximumNumberOfIterations, MetricError } |
| using | Superclass = ScaledSingleValuedNonLinearOptimizer |

| Public Types inherited from itk::ScaledSingleValuedNonLinearOptimizer | |
|---|---|
| using | `ConstPointer = SmartPointer<const Self>` |
| using | `Pointer = SmartPointer<Self>` |
| using | ScaledCostFunctionPointer = ScaledCostFunctionType::Pointer |
| using | ScaledCostFunctionType = ScaledSingleValuedCostFunction |
| using | ScalesType = NonLinearOptimizer::ScalesType |
| using | Self = ScaledSingleValuedNonLinearOptimizer |
| using | Superclass = SingleValuedNonLinearOptimizer |

| Public Member Functions | |
|---|---|
| virtual void | AdvanceOneStep () |
| virtual void | ComputeCurrentValueOff () |
| virtual void | ComputeCurrentValueOn () |
| virtual const char * | GetClassName () const |
| virtual bool | GetComputeCurrentValue () const |
| virtual unsigned long | GetCurrentIteration () const |
| virtual double | GetGradientMagnitude () const |
| virtual double | GetLearningRate () const |
| virtual unsigned long | GetNumberOfIterations () const |
| virtual double | GetParam_A () const |
| virtual double | GetParam_a () const |
| virtual double | GetParam_alpha () const |
| virtual double | GetParam_c () const |
| virtual double | GetParam_gamma () const |
| virtual StopConditionType | GetStopCondition () const |
| virtual double | GetValue () const |
| | ITK_DISALLOW_COPY_AND_MOVE (FiniteDifferenceGradientDescentOptimizer) |
| void | ResumeOptimization () |
| virtual void | SetComputeCurrentValue (bool _arg) |
| virtual void | SetNumberOfIterations (unsigned long _arg) |
| virtual void | SetParam_A (double _arg) |
| virtual void | SetParam_a (double _arg) |
| virtual void | SetParam_alpha (double _arg) |
| virtual void | SetParam_c (double _arg) |
| virtual void | SetParam_gamma (double _arg) |
| void | StartOptimization () override |
| void | StopOptimization () |

| Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |
|---|---|
| const ParametersType & | GetCurrentPosition () const override |
| virtual bool | GetMaximize () const |
| virtual const ScaledCostFunctionType * | GetScaledCostFunction () |
| virtual const ParametersType & | GetScaledCurrentPosition () |
| bool | GetUseScales () const |
| virtual void | InitializeScales () |
| | ITK_DISALLOW_COPY_AND_MOVE (ScaledSingleValuedNonLinearOptimizer) |
| virtual void | MaximizeOff () |
| virtual void | MaximizeOn () |
| void | SetCostFunction (CostFunctionType *costFunction) override |
| virtual void | SetMaximize (bool _arg) |
| virtual void | SetUseScales (bool arg) |

| Static Public Member Functions | |
|---|---|
| static Pointer | New () |

| Static Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |
|---|---|
| static Pointer | New () |

| Protected Member Functions | |
|---|---|
| virtual double | Compute_a (unsigned long k) const |
| virtual double | Compute_c (unsigned long k) const |
| | FiniteDifferenceGradientDescentOptimizer () |
| void | PrintSelf (std::ostream &os, Indent indent) const override |
| | ~FiniteDifferenceGradientDescentOptimizer () override = default |

| Protected Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |
|---|---|
| virtual void | GetScaledDerivative (const ParametersType &parameters, DerivativeType &derivative) const |
| virtual MeasureType | GetScaledValue (const ParametersType &parameters) const |
| virtual void | GetScaledValueAndDerivative (const ParametersType &parameters, MeasureType &value, DerivativeType &derivative) const |
| void | PrintSelf (std::ostream &os, Indent indent) const override |
| | ScaledSingleValuedNonLinearOptimizer () |
| void | SetCurrentPosition (const ParametersType &param) override |
| virtual void | SetScaledCurrentPosition (const ParametersType &parameters) |
| | ~ScaledSingleValuedNonLinearOptimizer () override = default |

| Protected Attributes | |
|---|---|
| bool | m_ComputeCurrentValue { false } |
| DerivativeType | m_Gradient {} |
| double | m_GradientMagnitude { 0.0 } |
| double | m_LearningRate { 0.0 } |

| Protected Attributes inherited from itk::ScaledSingleValuedNonLinearOptimizer | |
|---|---|
| ScaledCostFunctionPointer | m_ScaledCostFunction {} |
| ParametersType | m_ScaledCurrentPosition {} |

| Private Attributes | |
|---|---|
| unsigned long | m_CurrentIteration { 0 } |
| unsigned long | m_NumberOfIterations { 100 } |
| double | m_Param_A { 1.0 } |
| double | m_Param_a { 1.0 } |
| double | m_Param_alpha { 0.602 } |
| double | m_Param_c { 1.0 } |
| double | m_Param_gamma { 0.101 } |
| bool | m_Stop { false } |
| StopConditionType | m_StopCondition { MaximumNumberOfIterations } |
| double | m_Value { 0.0 } |

`using itk::FiniteDifferenceGradientDescentOptimizer::ConstPointer = SmartPointer<const Self>`

Definition at line 64 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`using itk::FiniteDifferenceGradientDescentOptimizer::Pointer = SmartPointer<Self>`

Definition at line 63 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`using itk::FiniteDifferenceGradientDescentOptimizer::Self = FiniteDifferenceGradientDescentOptimizer`

Standard class typedefs.

Definition at line 61 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`using itk::FiniteDifferenceGradientDescentOptimizer::Superclass = ScaledSingleValuedNonLinearOptimizer`

Definition at line 62 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`enum itk::FiniteDifferenceGradientDescentOptimizer::StopConditionType`

Codes of stopping conditions.

| Enumerator | |
|---|---|
| MaximumNumberOfIterations | |
| MetricError | |

Definition at line 73 of file itkFiniteDifferenceGradientDescentOptimizer.h.

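`itk::FiniteDifferenceGradientDescentOptimizer::FiniteDifferenceGradientDescentOptimizer ()` (protected)

`itk::FiniteDifferenceGradientDescentOptimizer::~FiniteDifferenceGradientDescentOptimizer ()` (protected, override, default)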

`void itk::FiniteDifferenceGradientDescentOptimizer::AdvanceOneStep ()` (virtual)

Advance one step in the direction of the negative (finite difference) gradient.

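`double itk::FiniteDifferenceGradientDescentOptimizer::Compute_a (unsigned long k) const` (protected, virtual)

`double itk::FiniteDifferenceGradientDescentOptimizer::Compute_c (unsigned long k) const` (protected, virtual)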

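`void itk::FiniteDifferenceGradientDescentOptimizer::ComputeCurrentValueOff ()` (virtual)

`void itk::FiniteDifferenceGradientDescentOptimizer::ComputeCurrentValueOn ()` (virtual)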

`const char * itk::FiniteDifferenceGradientDescentOptimizer::GetClassName () const` (virtual)

Run-time type information (and related methods).

Reimplemented from itk::ScaledSingleValuedNonLinearOptimizer.

Reimplemented in elastix::FiniteDifferenceGradientDescent< TElastix >.

`bool itk::FiniteDifferenceGradientDescentOptimizer::GetComputeCurrentValue () const` (virtual)

`unsigned long itk::FiniteDifferenceGradientDescentOptimizer::GetCurrentIteration () const` (virtual)

Get the current iteration number.

`double itk::FiniteDifferenceGradientDescentOptimizer::GetGradientMagnitude () const` (virtual)

Get the CurrentStepLength, GradientMagnitude and LearningRate (a_k)

`double itk::FiniteDifferenceGradientDescentOptimizer::GetLearningRate () const` (virtual)
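The getter description above groups the step length, gradient magnitude and learning rate. Assuming the gradient magnitude is the Euclidean norm of the finite difference gradient g and the learning rate is a(k), the update rule implies that the length of one step is their product, |x(k+1) - x(k)| = a(k) |g|, measured in the (scaled) parameter space the optimizer works in. The hypothetical `StepDiagnostics` struct and `ComputeDiagnostics` helper below make that relationship concrete; they are not part of the class.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical per-iteration diagnostics; not part of the elastix class.
struct StepDiagnostics
{
  double learningRate;      // a(k)
  double gradientMagnitude; // assumed: Euclidean norm of the gradient estimate
  double stepLength;        // |x(k+1) - x(k)| = a(k) * gradientMagnitude
};

StepDiagnostics ComputeDiagnostics(const std::vector<double> & gradient, double a_k)
{
  assert(a_k >= 0.0); // a descent step uses a non-negative gain
  double sumOfSquares = 0.0;
  for (const double g : gradient)
  {
    sumOfSquares += g * g;
  }
  const double magnitude = std::sqrt(sumOfSquares);
  return StepDiagnostics{ a_k, magnitude, a_k * magnitude };
}
```

For a gradient estimate of (3, 4) and a(k) = 0.5, the gradient magnitude is 5 and the step length is 2.5.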

`unsigned long itk::FiniteDifferenceGradientDescentOptimizer::GetNumberOfIterations () const` (virtual)

Get the number of iterations.

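`double itk::FiniteDifferenceGradientDescentOptimizer::GetParam_A () const` (virtual)

`double itk::FiniteDifferenceGradientDescentOptimizer::GetParam_a () const` (virtual)

`double itk::FiniteDifferenceGradientDescentOptimizer::GetParam_alpha () const` (virtual)

`double itk::FiniteDifferenceGradientDescentOptimizer::GetParam_c () const` (virtual)

`double itk::FiniteDifferenceGradientDescentOptimizer::GetParam_gamma () const` (virtual)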

`StopConditionType itk::FiniteDifferenceGradientDescentOptimizer::GetStopCondition () const` (virtual)

Get Stop condition.

`double itk::FiniteDifferenceGradientDescentOptimizer::GetValue () const` (virtual)

Get the current value.

`itk::FiniteDifferenceGradientDescentOptimizer::ITK_DISALLOW_COPY_AND_MOVE (FiniteDifferenceGradientDescentOptimizer)`

`Pointer itk::FiniteDifferenceGradientDescentOptimizer::New ()` (static)

Method for creation through the object factory.

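`void itk::FiniteDifferenceGradientDescentOptimizer::PrintSelf (std::ostream &os, Indent indent) const` (protected, override)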

PrintSelf method.

`void itk::FiniteDifferenceGradientDescentOptimizer::ResumeOptimization ()`

Resume a previously stopped optimization from the current parameters.

`void itk::FiniteDifferenceGradientDescentOptimizer::SetComputeCurrentValue (bool _arg)` (virtual)

`void itk::FiniteDifferenceGradientDescentOptimizer::SetNumberOfIterations (unsigned long _arg)` (virtual)

Set the number of iterations.

`void itk::FiniteDifferenceGradientDescentOptimizer::SetParam_A (double _arg)` (virtual)

Set/Get A.

`void itk::FiniteDifferenceGradientDescentOptimizer::SetParam_a (double _arg)` (virtual)

Set/Get a.

`void itk::FiniteDifferenceGradientDescentOptimizer::SetParam_alpha (double _arg)` (virtual)

Set/Get alpha.

`void itk::FiniteDifferenceGradientDescentOptimizer::SetParam_c (double _arg)` (virtual)

Set/Get c.

`void itk::FiniteDifferenceGradientDescentOptimizer::SetParam_gamma (double _arg)` (virtual)

Set/Get gamma.

`void itk::FiniteDifferenceGradientDescentOptimizer::StartOptimization ()` (override)

Start optimization.

`void itk::FiniteDifferenceGradientDescentOptimizer::StopOptimization ()`

Stop optimization.
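`StartOptimization`, `ResumeOptimization` and `StopOptimization`, together with the iteration counter and `StopConditionType`, suggest a control loop along the lines of the sketch below. `ControlFlowSketch` is a hypothetical, self-contained stand-in, not the elastix class: one iteration is injected as a callable, and the way `MetricError` is triggered (an exception thrown by the step, which this sketch swallows) is an assumption, because this page does not document it.

```cpp
#include <cassert>
#include <functional>
#include <stdexcept>
#include <utility>

// Hypothetical stop codes mirroring the documented StopConditionType.
enum class StopConditionType
{
  MaximumNumberOfIterations,
  MetricError
};

// Hypothetical stand-in (not the elastix class): only the control flow of
// Start/Resume/Stop is modelled; one iteration is an injected callable.
class ControlFlowSketch
{
public:
  explicit ControlFlowSketch(std::function<void()> advanceOneStep)
    : m_AdvanceOneStep(std::move(advanceOneStep))
  {}

  void SetNumberOfIterations(unsigned long n)
  {
    assert(n > 0); // with a limit of 0 this loop would still run one step
    m_NumberOfIterations = n;
  }

  unsigned long GetCurrentIteration() const { return m_CurrentIteration; }
  StopConditionType GetStopCondition() const { return m_StopCondition; }

  // Start from iteration 0 and run until a stop condition is met.
  void StartOptimization()
  {
    m_CurrentIteration = 0;
    ResumeOptimization();
  }

  // Continue from the current iteration without resetting the counter.
  void ResumeOptimization()
  {
    m_Stop = false;
    while (!m_Stop)
    {
      try
      {
        m_AdvanceOneStep();
      }
      catch (const std::exception &)
      {
        // Assumption: a failing cost evaluation ends the run with MetricError.
        m_StopCondition = StopConditionType::MetricError;
        StopOptimization();
        break;
      }
      ++m_CurrentIteration;
      if (m_CurrentIteration >= m_NumberOfIterations)
      {
        m_StopCondition = StopConditionType::MaximumNumberOfIterations;
        StopOptimization();
      }
    }
  }

  void StopOptimization() { m_Stop = true; }

private:
  std::function<void()> m_AdvanceOneStep;
  unsigned long m_CurrentIteration{ 0 };
  unsigned long m_NumberOfIterations{ 100 };
  bool m_Stop{ false };
  StopConditionType m_StopCondition{ StopConditionType::MaximumNumberOfIterations };
};
```

Because `ResumeOptimization` does not reset the iteration counter in this sketch, raising the iteration limit and resuming continues from where the previous run stopped.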

`bool itk::FiniteDifferenceGradientDescentOptimizer::m_ComputeCurrentValue { false }` (protected)

Whether the current value of the metric is computed. This is not necessary for the optimisation itself; it is only useful for progress information.

Definition at line 158 of file itkFiniteDifferenceGradientDescentOptimizer.h.
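The remark that computing the current value is not necessary can be quantified from the update rule: each iteration needs two cost evaluations per parameter, C(x(k)_j + c(k)) and C(x(k)_j - c(k)). The hypothetical function `EvaluationsPerIteration` counts them, assuming that enabling `ComputeCurrentValue` adds exactly one further evaluation of C(x(k)).

```cpp
#include <cassert>

// Hypothetical helper: number of cost function evaluations in one iteration.
// The update rule needs C(x(k)_j + c(k)) and C(x(k)_j - c(k)) for every
// parameter j. Assumption: ComputeCurrentValue adds one evaluation of C(x(k)).
unsigned long EvaluationsPerIteration(unsigned long numberOfParameters, bool computeCurrentValue)
{
  assert(numberOfParameters > 0); // an empty parameter vector has nothing to optimize
  return 2 * numberOfParameters + (computeCurrentValue ? 1UL : 0UL);
}
```

For example, a transform with 12 parameters needs 24 evaluations per iteration, or 25 with the current value switched on. A single simultaneous perturbation gradient estimate, by contrast, uses two evaluations regardless of the number of parameters, which is one way this optimizer differs from the SimultaneousPerturbation optimizer.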

`unsigned long itk::FiniteDifferenceGradientDescentOptimizer::m_CurrentIteration { 0 }` (private)

Definition at line 173 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`DerivativeType itk::FiniteDifferenceGradientDescentOptimizer::m_Gradient {}` (protected)

Definition at line 149 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`double itk::FiniteDifferenceGradientDescentOptimizer::m_GradientMagnitude { 0.0 }` (protected)

Definition at line 151 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`double itk::FiniteDifferenceGradientDescentOptimizer::m_LearningRate { 0.0 }` (protected)

Definition at line 150 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`unsigned long itk::FiniteDifferenceGradientDescentOptimizer::m_NumberOfIterations { 100 }` (private)

Definition at line 172 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`double itk::FiniteDifferenceGradientDescentOptimizer::m_Param_A { 1.0 }` (private)

Definition at line 178 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`double itk::FiniteDifferenceGradientDescentOptimizer::m_Param_a { 1.0 }` (private)

Parameters, as described by Spall.

Definition at line 176 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`double itk::FiniteDifferenceGradientDescentOptimizer::m_Param_alpha { 0.602 }` (private)

Definition at line 179 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`double itk::FiniteDifferenceGradientDescentOptimizer::m_Param_c { 1.0 }` (private)

Definition at line 177 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`double itk::FiniteDifferenceGradientDescentOptimizer::m_Param_gamma { 0.101 }` (private)

Definition at line 180 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`bool itk::FiniteDifferenceGradientDescentOptimizer::m_Stop { false }` (private)

Private member variables.

Definition at line 169 of file itkFiniteDifferenceGradientDescentOptimizer.h.

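`StopConditionType itk::FiniteDifferenceGradientDescentOptimizer::m_StopCondition { MaximumNumberOfIterations }` (private)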

Definition at line 171 of file itkFiniteDifferenceGradientDescentOptimizer.h.

`double itk::FiniteDifferenceGradientDescentOptimizer::m_Value { 0.0 }` (private)

Definition at line 170 of file itkFiniteDifferenceGradientDescentOptimizer.h.

Generated on 2024-07-17 for elastix by Doxygen 1.11.0 (9b424b03c9833626cd435af22a444888fbbb192d)