#include <itkStochasticGradientDescentOptimizer.h>

Implements a gradient descent optimizer.

StochasticGradientDescentOptimizer implements a simple gradient descent optimizer. At each iteration the current position is updated according to

\[
p_{n+1} = p_n + \mbox{learningRate} \, \frac{\partial f(p_n)}{\partial p_n}
\]

The learning rate is a fixed scalar defined via SetLearningRate(). The optimizer steps through a user-defined number of iterations; no convergence checking is done.
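The update rule and the fixed iteration count can be illustrated with a minimal, ITK-free sketch. The names `Parameters`, `Gradient` and `RunFixedSteps` are hypothetical and are not part of the documented class; the sketch applies the formula literally, including its plus sign.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical stand-ins for the ITK parameter and derivative types.
using Parameters = std::vector<double>;
using Gradient = std::function<Parameters(const Parameters &)>;

// Applies p_{n+1} = p_n + learningRate * df/dp(p_n) a fixed number of times.
// There is no convergence check, mirroring the description above.
Parameters
RunFixedSteps(Parameters p, const Gradient & gradient, double learningRate, unsigned long numberOfIterations)
{
  for (unsigned long n = 0; n < numberOfIterations; ++n)
  {
    const Parameters g = gradient(p);
    for (std::size_t i = 0; i < p.size(); ++i)
    {
      p[i] += learningRate * g[i];
    }
  }
  return p;
}
```

Taken literally, the plus sign moves the position toward larger values of f. Whether the actual implementation adds or subtracts the scaled derivative is not stated on this page, so the sign here is an assumption that follows the formula as printed.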

Additionally, user can scale each component of the

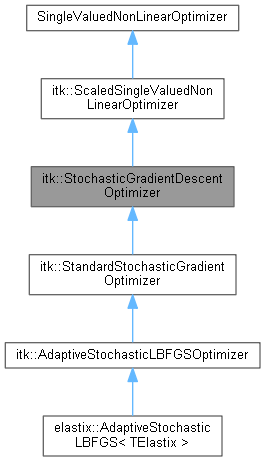

This class differs from itk::GradientDescentOptimizer in that it is based on ScaledSingleValuedNonLinearOptimizer.
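As a rough illustration of per-component scaling, the sketch below divides each derivative component by a matching scale factor before a step would be taken. This is an assumption made for illustration only: the page does not state whether the scaled base class multiplies or divides, and `ScaleDerivative` is a hypothetical helper rather than a documented member.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper, not part of the documented API.
// Assumption: each derivative component is divided by its scale.
std::vector<double>
ScaleDerivative(const std::vector<double> & derivative, const std::vector<double> & scales)
{
  if (derivative.size() != scales.size())
  {
    throw std::invalid_argument("derivative and scales must have the same length");
  }
  std::vector<double> scaled(derivative.size());
  for (std::size_t i = 0; i < derivative.size(); ++i)
  {
    scaled[i] = derivative[i] / scales[i];
  }
  return scaled;
}
```

Under this convention a large scale damps the corresponding parameter, which is one way to balance parameters of very different magnitude.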

Definition at line 53 of file itkStochasticGradientDescentOptimizer.h.

Data Structures | |

| struct | MultiThreaderParameterType |

Public Types | |

| using | ConstPointer = SmartPointer<const Self> |

| using | Pointer = SmartPointer<Self> |

| using | ScaledCostFunctionPointer |

| using | ScaledCostFunctionType |

| using | ScalesType |

| using | Self = StochasticGradientDescentOptimizer |

| enum | StopConditionType { MaximumNumberOfIterations , MetricError , MinimumStepSize , InvalidDiagonalMatrix , GradientMagnitudeTolerance , LineSearchError } |

| using | Superclass = ScaledSingleValuedNonLinearOptimizer |

Public Types inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| using | ConstPointer = SmartPointer<const Self> |

| using | Pointer = SmartPointer<Self> |

| using | ScaledCostFunctionPointer = ScaledCostFunctionType::Pointer |

| using | ScaledCostFunctionType = ScaledSingleValuedCostFunction |

| using | ScalesType = NonLinearOptimizer::ScalesType |

| using | Self = ScaledSingleValuedNonLinearOptimizer |

| using | Superclass = SingleValuedNonLinearOptimizer |

Public Member Functions | |

| virtual void | AdvanceOneStep () |

| virtual const char * | GetClassName () const |

| virtual unsigned int | GetCurrentInnerIteration () const |

| virtual unsigned int | GetCurrentIteration () const |

| virtual const DerivativeType & | GetGradient () |

| virtual unsigned int | GetLBFGSMemory () const |

| virtual const double & | GetLearningRate () |

| virtual const unsigned long & | GetNumberOfInnerIterations () |

| virtual const unsigned long & | GetNumberOfIterations () |

| virtual const DerivativeType & | GetPreviousGradient () |

| virtual const ParametersType & | GetPreviousPosition () |

| virtual const DerivativeType & | GetSearchDir () |

| virtual const StopConditionType & | GetStopCondition () |

| virtual const double & | GetValue () |

| ITK_DISALLOW_COPY_AND_MOVE (StochasticGradientDescentOptimizer) | |

| virtual void | MetricErrorResponse (ExceptionObject &err) |

| virtual void | ResumeOptimization () |

| virtual void | SetLearningRate (double _arg) |

| virtual void | SetNumberOfIterations (unsigned long _arg) |

| void | SetNumberOfWorkUnits (ThreadIdType numberOfThreads) |

| virtual void | SetPreviousGradient (DerivativeType _arg) |

| virtual void | SetPreviousPosition (ParametersType _arg) |

| virtual void | SetUseEigen (bool _arg) |

| virtual void | SetUseMultiThread (bool _arg) |

| void | StartOptimization () override |

| virtual void | StopOptimization () |

Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| const ParametersType & | GetCurrentPosition () const override |

| virtual bool | GetMaximize () const |

| virtual const ScaledCostFunctionType * | GetScaledCostFunction () |

| virtual const ParametersType & | GetScaledCurrentPosition () |

| bool | GetUseScales () const |

| virtual void | InitializeScales () |

| ITK_DISALLOW_COPY_AND_MOVE (ScaledSingleValuedNonLinearOptimizer) | |

| virtual void | MaximizeOff () |

| virtual void | MaximizeOn () |

| void | SetCostFunction (CostFunctionType *costFunction) override |

| virtual void | SetMaximize (bool _arg) |

| virtual void | SetUseScales (bool arg) |

Static Public Member Functions | |

| static Pointer | New () |

Static Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| static Pointer | New () |

Protected Types | |

| using | ThreadInfoType = MultiThreaderBase::WorkUnitInfo |

Protected Member Functions | |

| void | PrintSelf (std::ostream &os, Indent indent) const override |

| StochasticGradientDescentOptimizer () | |

| ~StochasticGradientDescentOptimizer () override=default | |

Protected Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| virtual void | GetScaledDerivative (const ParametersType &parameters, DerivativeType &derivative) const |

| virtual MeasureType | GetScaledValue (const ParametersType &parameters) const |

| virtual void | GetScaledValueAndDerivative (const ParametersType &parameters, MeasureType &value, DerivativeType &derivative) const |

| void | PrintSelf (std::ostream &os, Indent indent) const override |

| ScaledSingleValuedNonLinearOptimizer () | |

| void | SetCurrentPosition (const ParametersType &param) override |

| virtual void | SetScaledCurrentPosition (const ParametersType &parameters) |

| ~ScaledSingleValuedNonLinearOptimizer () override=default | |

Protected Attributes | |

| unsigned long | m_CurrentInnerIteration {} |

| unsigned long | m_CurrentIteration { 0 } |

| DerivativeType | m_Gradient {} |

| unsigned long | m_LBFGSMemory { 0 } |

| double | m_LearningRate { 1.0 } |

| ParametersType | m_MeanSearchDir {} |

| unsigned long | m_NumberOfInnerIterations {} |

| unsigned long | m_NumberOfIterations { 100 } |

| DerivativeType | m_PrePreviousGradient {} |

| ParametersType | m_PrePreviousSearchDir {} |

| DerivativeType | m_PreviousGradient {} |

| ParametersType | m_PreviousPosition {} |

| ParametersType | m_PreviousSearchDir {} |

| ParametersType | m_SearchDir {} |

| bool | m_Stop { false } |

| StopConditionType | m_StopCondition { MaximumNumberOfIterations } |

| MultiThreaderBase::Pointer | m_Threader { MultiThreaderBase::New() } |

| double | m_Value { 0.0 } |

Protected Attributes inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| ScaledCostFunctionPointer | m_ScaledCostFunction {} |

| ParametersType | m_ScaledCurrentPosition {} |

Private Member Functions | |

| void | ThreadedAdvanceOneStep (ThreadIdType threadId, ParametersType &newPosition) |

Static Private Member Functions | |

| static ITK_THREAD_RETURN_FUNCTION_CALL_CONVENTION | AdvanceOneStepThreaderCallback (void *arg) |

Private Attributes | |

| bool | m_UseEigen { false } |

| bool | m_UseMultiThread { false } |

| using itk::StochasticGradientDescentOptimizer::ConstPointer = SmartPointer<const Self> |

Definition at line 62 of file itkStochasticGradientDescentOptimizer.h.

| using itk::StochasticGradientDescentOptimizer::Pointer = SmartPointer<Self> |

Definition at line 61 of file itkStochasticGradientDescentOptimizer.h.

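| using ScaledCostFunctionPointer |

Definition at line 87 of file itkScaledSingleValuedNonLinearOptimizer.h.

| using ScaledCostFunctionType |

Definition at line 86 of file itkScaledSingleValuedNonLinearOptimizer.h.

| using ScalesType |

Definition at line 85 of file itkScaledSingleValuedNonLinearOptimizer.h.

| using itk::StochasticGradientDescentOptimizer::Self = StochasticGradientDescentOptimizer |

Standard class typedefs.

Definition at line 59 of file itkStochasticGradientDescentOptimizer.h.

| using itk::StochasticGradientDescentOptimizer::Superclass = ScaledSingleValuedNonLinearOptimizer |

Definition at line 60 of file itkStochasticGradientDescentOptimizer.h.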

| using itk::StochasticGradientDescentOptimizer::ThreadInfoType = MultiThreaderBase::WorkUnitInfo | protected |

Typedef for multi-threading.

Definition at line 179 of file itkStochasticGradientDescentOptimizer.h.

| enum itk::StochasticGradientDescentOptimizer::StopConditionType |

Codes of stopping conditions. The MinimumStepSize stop condition never occurs, but may be implemented in inheriting classes.

| Enumerator | |
|---|---|
| MaximumNumberOfIterations | |
| MetricError | |
| MinimumStepSize | |
| InvalidDiagonalMatrix | |
| GradientMagnitudeTolerance | |
| LineSearchError | |

Definition at line 82 of file itkStochasticGradientDescentOptimizer.h.

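| itk::StochasticGradientDescentOptimizer::StochasticGradientDescentOptimizer () | protected |

| itk::StochasticGradientDescentOptimizer::~StochasticGradientDescentOptimizer () | override, protected, default |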

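| virtual void itk::StochasticGradientDescentOptimizer::AdvanceOneStep () | virtual |

Advance one step following the gradient direction.

Reimplemented in elastix::AdaptiveStochasticLBFGS< TElastix >, and itk::StandardStochasticGradientOptimizer.

| static ITK_THREAD_RETURN_FUNCTION_CALL_CONVENTION itk::StochasticGradientDescentOptimizer::AdvanceOneStepThreaderCallback (void *arg) | static, private |

The callback function.

| virtual const char * itk::StochasticGradientDescentOptimizer::GetClassName () const | virtual |

Run-time type information (and related methods).

Reimplemented from itk::ScaledSingleValuedNonLinearOptimizer.

Reimplemented in elastix::AdaptiveStochasticLBFGS< TElastix >, itk::AdaptiveStochasticLBFGSOptimizer, and itk::StandardStochasticGradientOptimizer.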

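| virtual unsigned int itk::StochasticGradientDescentOptimizer::GetCurrentInnerIteration () const | virtual |

Get the current inner iteration number.

| virtual unsigned int itk::StochasticGradientDescentOptimizer::GetCurrentIteration () const | virtual |

Get the current iteration number.

| virtual const DerivativeType & itk::StochasticGradientDescentOptimizer::GetGradient () | virtual |

Get the current gradient.

| virtual unsigned int itk::StochasticGradientDescentOptimizer::GetLBFGSMemory () const | virtual |

Get the inner LBFGSMemory.

| virtual const double & itk::StochasticGradientDescentOptimizer::GetLearningRate () | virtual |

Get the learning rate.

| virtual const unsigned long & itk::StochasticGradientDescentOptimizer::GetNumberOfInnerIterations () | virtual |

Get the number of inner loop iterations.

| virtual const unsigned long & itk::StochasticGradientDescentOptimizer::GetNumberOfIterations () | virtual |

Get the number of iterations.

| virtual const DerivativeType & itk::StochasticGradientDescentOptimizer::GetPreviousGradient () | virtual |

Get the previous gradient.

| virtual const ParametersType & itk::StochasticGradientDescentOptimizer::GetPreviousPosition () | virtual |

Get the previous position.

| virtual const DerivativeType & itk::StochasticGradientDescentOptimizer::GetSearchDir () | virtual |

Get the current search direction.

| virtual const StopConditionType & itk::StochasticGradientDescentOptimizer::GetStopCondition () | virtual |

Get the stop condition.

| virtual const double & itk::StochasticGradientDescentOptimizer::GetValue () | virtual |

Get the current value.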

| itk::StochasticGradientDescentOptimizer::ITK_DISALLOW_COPY_AND_MOVE | ( | StochasticGradientDescentOptimizer | ) |

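| virtual void itk::StochasticGradientDescentOptimizer::MetricErrorResponse (ExceptionObject &err) | virtual |

Stop optimization and pass on exception.

| static Pointer itk::StochasticGradientDescentOptimizer::New () | static |

Method for creation through the object factory.

| void itk::StochasticGradientDescentOptimizer::PrintSelf (std::ostream &os, Indent indent) const | override, protected |

| virtual void itk::StochasticGradientDescentOptimizer::ResumeOptimization () | virtual |

Resume previously stopped optimization with current parameters.

Reimplemented in elastix::AdaptiveStochasticLBFGS< TElastix >.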

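| virtual void itk::StochasticGradientDescentOptimizer::SetLearningRate (double _arg) | virtual |

Set the learning rate.

| virtual void itk::StochasticGradientDescentOptimizer::SetNumberOfIterations (unsigned long _arg) | virtual |

Set the number of iterations.

| void itk::StochasticGradientDescentOptimizer::SetNumberOfWorkUnits (ThreadIdType numberOfThreads) | inline |

Set the number of threads.

Definition at line 164 of file itkStochasticGradientDescentOptimizer.h.

| virtual void itk::StochasticGradientDescentOptimizer::SetPreviousGradient (DerivativeType _arg) | virtual |

Set the previous gradient.

| virtual void itk::StochasticGradientDescentOptimizer::SetPreviousPosition (ParametersType _arg) | virtual |

Set the previous position.

| virtual void itk::StochasticGradientDescentOptimizer::SetUseEigen (bool _arg) | virtual |

| virtual void itk::StochasticGradientDescentOptimizer::SetUseMultiThread (bool _arg) | virtual |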

override |

Start optimization.

|

virtual |

Stop optimization.

Reimplemented in elastix::AdaptiveStochasticLBFGS< TElastix >.
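How starting, stopping, resuming and the metric error response could fit together is sketched below as a small state machine. This is a hypothetical illustration and not the class's implementation: `LoopSketch`, `StopCondition` and `evaluateAndStep` are invented names, and only two of the documented stop conditions are modelled.

```cpp
#include <functional>
#include <stdexcept>

// Hypothetical subset of the documented stop conditions.
enum class StopCondition
{
  MaximumNumberOfIterations,
  MetricError
};

// Hypothetical sketch of a start / resume / stop loop.
struct LoopSketch
{
  unsigned long numberOfIterations = 100;
  unsigned long currentIteration = 0;
  bool          stop = false;
  StopCondition stopCondition = StopCondition::MaximumNumberOfIterations;

  // Stand-in for "evaluate the metric, then advance one step".
  std::function<void()> evaluateAndStep;

  // Restart from iteration zero.
  void Start()
  {
    currentIteration = 0;
    Resume();
  }

  // Continue from the current iteration count.
  void Resume()
  {
    stop = false;
    while (!stop)
    {
      try
      {
        evaluateAndStep();
      }
      catch (const std::exception &)
      {
        // Record why the loop ended, then pass the exception on.
        stopCondition = StopCondition::MetricError;
        stop = true;
        throw;
      }
      ++currentIteration;
      if (currentIteration >= numberOfIterations)
      {
        stopCondition = StopCondition::MaximumNumberOfIterations;
        stop = true;
      }
    }
  }

  // Request that the loop ends after the current step.
  void Stop() { stop = true; }
};
```

Keeping the iteration counter outside `Resume()` is what lets a stopped run continue where it left off, while `Start()` resets it.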

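| void itk::StochasticGradientDescentOptimizer::ThreadedAdvanceOneStep (ThreadIdType threadId, ParametersType &newPosition) | inline, private |

The threaded implementation of AdvanceOneStep().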
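One plausible way to split a position update across work units is to give each unit a contiguous slice of the parameter vector. The sketch below is an assumption about the general technique, not a description of this class's code: `ThreadedStepSketch` and its parameters are hypothetical, and the step uses the plus sign from the formula in the class description.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical sketch: the work unit with index threadId updates only its own
// slice of newPosition. newPosition must already have the size of current,
// and gradient must have the same size as well.
void
ThreadedStepSketch(unsigned int                threadId,
                   unsigned int                numberOfWorkUnits,
                   const std::vector<double> & current,
                   const std::vector<double> & gradient,
                   double                      learningRate,
                   std::vector<double> &       newPosition)
{
  const std::size_t n = current.size();
  const std::size_t units = std::max<std::size_t>(1, numberOfWorkUnits);
  // Ceiling division so that every component is covered by some slice.
  const std::size_t chunk = (n + units - 1) / units;
  const std::size_t begin = std::min(n, threadId * chunk);
  const std::size_t end = std::min(n, begin + chunk);

  for (std::size_t j = begin; j < end; ++j)
  {
    newPosition[j] = current[j] + learningRate * gradient[j];
  }
}
```

Because the slices do not overlap, calls for different `threadId` values write to disjoint elements and can run concurrently without locking; calling the function once per index in a plain loop gives the same result.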

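| unsigned long itk::StochasticGradientDescentOptimizer::m_CurrentInnerIteration {} | protected |

Definition at line 199 of file itkStochasticGradientDescentOptimizer.h.

| unsigned long itk::StochasticGradientDescentOptimizer::m_CurrentIteration { 0 } | protected |

Definition at line 198 of file itkStochasticGradientDescentOptimizer.h.

| DerivativeType itk::StochasticGradientDescentOptimizer::m_Gradient {} | protected |

Definition at line 183 of file itkStochasticGradientDescentOptimizer.h.

| unsigned long itk::StochasticGradientDescentOptimizer::m_LBFGSMemory { 0 } | protected |

Definition at line 200 of file itkStochasticGradientDescentOptimizer.h.

| double itk::StochasticGradientDescentOptimizer::m_LearningRate { 1.0 } | protected |

Definition at line 188 of file itkStochasticGradientDescentOptimizer.h.

| ParametersType itk::StochasticGradientDescentOptimizer::m_MeanSearchDir {} | protected |

Definition at line 187 of file itkStochasticGradientDescentOptimizer.h.

| unsigned long itk::StochasticGradientDescentOptimizer::m_NumberOfInnerIterations {} | protected |

Definition at line 197 of file itkStochasticGradientDescentOptimizer.h.

| unsigned long itk::StochasticGradientDescentOptimizer::m_NumberOfIterations { 100 } | protected |

Definition at line 196 of file itkStochasticGradientDescentOptimizer.h.

| DerivativeType itk::StochasticGradientDescentOptimizer::m_PrePreviousGradient {} | protected |

Definition at line 191 of file itkStochasticGradientDescentOptimizer.h.

| ParametersType itk::StochasticGradientDescentOptimizer::m_PrePreviousSearchDir {} | protected |

Definition at line 186 of file itkStochasticGradientDescentOptimizer.h.

| DerivativeType itk::StochasticGradientDescentOptimizer::m_PreviousGradient {} | protected |

Definition at line 190 of file itkStochasticGradientDescentOptimizer.h.

| ParametersType itk::StochasticGradientDescentOptimizer::m_PreviousPosition {} | protected |

Definition at line 192 of file itkStochasticGradientDescentOptimizer.h.

| ParametersType itk::StochasticGradientDescentOptimizer::m_PreviousSearchDir {} | protected |

Definition at line 185 of file itkStochasticGradientDescentOptimizer.h.

| ParametersType itk::StochasticGradientDescentOptimizer::m_SearchDir {} | protected |

Definition at line 184 of file itkStochasticGradientDescentOptimizer.h.

| bool itk::StochasticGradientDescentOptimizer::m_Stop { false } | protected |

Definition at line 195 of file itkStochasticGradientDescentOptimizer.h.

| StopConditionType itk::StochasticGradientDescentOptimizer::m_StopCondition { MaximumNumberOfIterations } | protected |

Definition at line 189 of file itkStochasticGradientDescentOptimizer.h.

| MultiThreaderBase::Pointer itk::StochasticGradientDescentOptimizer::m_Threader { MultiThreaderBase::New() } | protected |

Definition at line 193 of file itkStochasticGradientDescentOptimizer.h.

| bool itk::StochasticGradientDescentOptimizer::m_UseEigen { false } | private |

Definition at line 211 of file itkStochasticGradientDescentOptimizer.h.

| bool itk::StochasticGradientDescentOptimizer::m_UseMultiThread { false } | private |

Definition at line 204 of file itkStochasticGradientDescentOptimizer.h.

| double itk::StochasticGradientDescentOptimizer::m_Value { 0.0 } | protected |

Definition at line 182 of file itkStochasticGradientDescentOptimizer.h.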

Generated on 2024-07-17 for elastix by Doxygen 1.11.0 (9b424b03c9833626cd435af22a444888fbbb192d)