#include <elxAdaGrad.h>

A gradient descent optimizer with an adaptive gain.

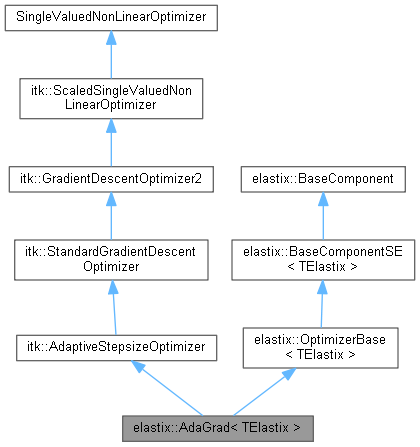

This class is a wrapper around the AdaptiveStepsizeOptimizer class. It takes care of setting parameters and printing progress information. For more information about the optimization method, please read the documentation of the AdaptiveStepsizeOptimizer class.

This optimizer is well suited for use with the Random image sampler or the RandomCoordinate image sampler, combined with the setting (NewSamplesEveryIteration "true"). Much effort has been spent on providing reasonable default values for all parameters, to simplify usage. In most registration problems, good results should be obtained without specifying any of the parameters described below (except, of course, the first one, which selects the optimizer).

This optimization method is described in the following references:

[1] P. Cruz, "Almost sure convergence and asymptotical normality of a generalization of Kesten's stochastic approximation algorithm for multidimensional case." Technical Report, 2005. http://hdl.handle.net/2052/74

[2] S. Klein, J.P.W. Pluim, M. Staring, and M.A. Viergever, "Adaptive stochastic gradient descent optimization for image registration," International Journal of Computer Vision, vol. 81, no. 3, pp. 227-239, 2009. http://dx.doi.org/10.1007/s11263-008-0168-y

Acceleration in case of many transform parameters was proposed in the following paper:

[3] Y. Qiao, B. van Lew, B.P.F. Lelieveldt, and M. Staring, "Fast Automatic Step Size Estimation for Gradient Descent Optimization of Image Registration," IEEE Transactions on Medical Imaging, vol. 35, no. 2, pp. 391-403, February 2016. http://elastix.dev/marius/publications/2016_j_TMIa.php

The parameters used in this class are:

Optimizer: Select this optimizer as follows:

(Optimizer "AdaGrad")

MaximumNumberOfIterations: The maximum number of iterations in each resolution.

example: (MaximumNumberOfIterations 100 100 50)

Default/recommended value: 500. When you are in a hurry, you may go down to 250 for example. When you have plenty of time, and want to be absolutely sure of the best results, a setting of 2000 is reasonable. In general, 500 gives satisfactory results.

MaximumNumberOfSamplingAttempts: The maximum number of sampling attempts. Sometimes not enough corresponding samples can be drawn, in which case an exception is thrown. With this parameter, the optimizer can try to draw another set of samples instead.

example: (MaximumNumberOfSamplingAttempts 10 15 10)

Default value: 0, i.e. just fail immediately, for backward compatibility.
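The retry behavior can be pictured as a small loop around the sampling step. The sketch below is hypothetical: `DrawSamplesWithRetries` and the `drawSamples` callback are illustrative names, not elastix API, and it assumes that the parameter counts the extra attempts allowed after the first failure, so that a value of 0 passes the first exception on immediately.

```cpp
#include <cassert>
#include <exception>
#include <functional>
#include <stdexcept>

// Hypothetical retry loop (not elastix API). drawSamples throws when not
// enough corresponding samples could be drawn.
// Assumption: maximumNumberOfSamplingAttempts = number of retries after the first failure.
void DrawSamplesWithRetries(const std::function<void()> & drawSamples,
                            unsigned int maximumNumberOfSamplingAttempts)
{
  for (unsigned int attempt = 0;; ++attempt)
  {
    try
    {
      drawSamples();
      return; // enough samples were drawn
    }
    catch (const std::exception &)
    {
      if (attempt >= maximumNumberOfSamplingAttempts)
      {
        throw; // no attempts left: pass the exception on
      }
      // otherwise fall through and draw a new set of samples
    }
  }
}
```

With the default of 0, the first sampling failure is rethrown unchanged, which matches the "fail immediately" behavior described above.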

AutomaticParameterEstimation: When this parameter is set to "true", many other parameters are calculated automatically: SP_a, SP_alpha, SigmoidMax, SigmoidMin, and SigmoidScale. In the elastix.log file the actually chosen values for these parameters can be found.

example: (AutomaticParameterEstimation "true")

Default/recommended value: "true". The parameter can be specified for each resolution, or for all resolutions at once.
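Parameters that can be specified for each resolution or for all resolutions at once follow a simple lookup rule. The sketch below assumes that a single value applies to every resolution and that a longer list holds one value per resolution; `ValueForResolution` is a hypothetical helper, not part of elastix, and falling back to a default when the parameter is absent is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not elastix API): select the value of a parameter for
// a given resolution level from the values listed in the parameter file.
template <typename T>
T ValueForResolution(const std::vector<T> & values, std::size_t level, const T & defaultValue)
{
  if (values.empty())
  {
    return defaultValue; // parameter not specified: use the default
  }
  if (values.size() == 1)
  {
    return values.front(); // one value for all resolutions at once
  }
  // Assumption: otherwise exactly one value per resolution is given.
  assert(level < values.size() && "one value per resolution expected");
  return values[level];
}
```

For example, with (MaximumNumberOfIterations 100 100 50), the third resolution (level index 2) receives 50 iterations.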

StepSizeStrategy: When this parameter is set to "Adaptive", the adaptive step size mechanism described in the documentation of itk::AdaptiveStepsizeOptimizer is used. The parameter can be specified for each resolution, or for all resolutions at once.

example: (StepSizeStrategy "Adaptive")

Default/recommended value: "Adaptive", because it makes the registration more robust. When a RandomCoordinate sampler is used with (UseRandomSampleRegion "true"), the adaptive step size mechanism is turned off, regardless of the user setting.
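The override rule for the RandomCoordinate sampler amounts to a single Boolean condition. In this sketch the function name is hypothetical, and passing the sampler name and strategy as plain strings is an assumption about how the settings might be read from the parameter file.

```cpp
#include <cassert>
#include <string>

// Hypothetical sketch (not elastix API): is the adaptive step size
// mechanism active for the current resolution?
bool UseAdaptiveStepSizes(const std::string & stepSizeStrategy,
                          const std::string & imageSampler,
                          bool useRandomSampleRegion)
{
  // A RandomCoordinate sampler with a random sample region switches the
  // adaptive mechanism off, whatever the user requested.
  const bool forcedOff = imageSampler == "RandomCoordinate" && useRandomSampleRegion;
  return stepSizeStrategy == "Adaptive" && !forcedOff;
}
```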

MaximumStepLength: The maximum step length, expressed in physical units.

example: (MaximumStepLength 1.0)

Default: mean voxel spacing of fixed and moving image. This seems to work well in general. This parameter only has influence when AutomaticParameterEstimation is used.

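SP_a: The gain $a(k)$ at each iteration $k$ is defined by $a(k) = \mathrm{SP\_a} / (\mathrm{SP\_A} + k + 1)^{\mathrm{SP\_alpha}}$.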

SP_a can be defined for each resolution.

example: (SP_a 3200.0 3200.0 1600.0)

The default value is 400.0. Tuning this variable for your specific problem is recommended. Alternatively, set AutomaticParameterEstimation to "true"; in that case, you do not need to specify SP_a, because SP_a has no influence when AutomaticParameterEstimation is used.

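SP_A: The offset in the gain $a(k) = \mathrm{SP\_a} / (\mathrm{SP\_A} + k + 1)^{\mathrm{SP\_alpha}}$, where $k$ is the iteration number.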

SP_A can be defined for each resolution.

example: (SP_A 50.0 50.0 100.0)

The default/recommended value for this particular optimizer is 20.0.

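SP_alpha: The exponent in the gain $a(k) = \mathrm{SP\_a} / (\mathrm{SP\_A} + k + 1)^{\mathrm{SP\_alpha}}$, where $k$ is the iteration number.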

SP_alpha can be defined for each resolution.

example: (SP_alpha 0.602 0.602 0.602)

The default/recommended value for this particular optimizer is 1.0. Alternatively, set AutomaticParameterEstimation to "true"; in that case, you do not need to specify SP_alpha, because SP_alpha has no influence when AutomaticParameterEstimation is used.
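The three SP_ parameters combine into a decaying gain. Assuming the standard form a(k) = SP_a / (SP_A + k + 1)^SP_alpha, where k is the iteration number (for the adaptive optimizers, a time variable takes the place of k), the gain can be computed as below. `ComputeGain` is an illustrative free function that mirrors, but is not, the inherited Compute_a(double k).

```cpp
#include <cassert>
#include <cmath>

// Gain a(k) = SP_a / (SP_A + k + 1)^SP_alpha.
double ComputeGain(double SP_a, double SP_A, double SP_alpha, double k)
{
  assert(SP_A + k + 1.0 > 0.0); // the base of the power must be positive
  return SP_a / std::pow(SP_A + k + 1.0, SP_alpha);
}
```

With SP_a = 400, SP_A = 20 and SP_alpha = 1, the gain is 400/21 ≈ 19.05 at k = 0 and exactly 4 at k = 79; larger SP_A flattens the initial decay, and larger SP_alpha makes the gain decrease faster.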

SigmoidMax: The maximum of the sigmoid function.

example: (SigmoidMax 1.0)

Default/recommended value: 1.0. This parameter has no influence when AutomaticParameterEstimation is used. In that case, a value of 1.0 is always used.

SigmoidMin: The minimum of the sigmoid function.

example: (SigmoidMin -0.8)

Default value: -0.8. This parameter has no influence when AutomaticParameterEstimation is used. In that case, the value is automatically determined, depending on the images, metric etc.

SigmoidScale: The scale/width of the sigmoid function.

example: (SigmoidScale 0.00001)

Default value: 1e-8. This parameter has no influence when AutomaticParameterEstimation is used. In that case, the value is automatically determined, depending on the images, metric etc.

SigmoidInitialTime: The initial time input for the sigmoid.

example: (SigmoidInitialTime 0.0 5.0 5.0)

Default value: 0.0. When increased, the optimization starts with smaller steps, leaving the possibility to increase the steps when necessary. If set to 0.0, the method starts with the largest step allowed.
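The Sigmoid* parameters control how the time variable that feeds the gain evolves. The sketch below is an illustration under stated assumptions, not the itk::AdaptiveStepsizeOptimizer code: it assumes, following [2], the update t_{k+1} = max(0, t_k + f(-g_k^T g_{k-1})), where g_k is the current gradient and f is a sigmoid ranging from SigmoidMin to SigmoidMax with width SigmoidScale. A plain logistic curve is used for f, which may differ from the exact expression in [2]. Both function names are illustrative.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <numeric>
#include <vector>

// Assumed logistic sigmoid with range (sigmoidMin, sigmoidMax) and width sigmoidScale.
double Sigmoid(double x, double sigmoidMin, double sigmoidMax, double sigmoidScale)
{
  assert(sigmoidScale > 0.0);
  return sigmoidMin + (sigmoidMax - sigmoidMin) / (1.0 + std::exp(-x / sigmoidScale));
}

// One time update: t <- max(0, t + f(-g_k . g_{k-1})).
// Opposing successive gradients increase t (smaller gain a(t));
// aligned gradients decrease t (larger gain).
double UpdateTime(double t,
                  const std::vector<double> & gradient,
                  const std::vector<double> & previousGradient,
                  double sigmoidMin, double sigmoidMax, double sigmoidScale)
{
  assert(gradient.size() == previousGradient.size());
  const double inner = std::inner_product(gradient.begin(), gradient.end(),
                                          previousGradient.begin(), 0.0);
  return std::max(0.0, t + Sigmoid(-inner, sigmoidMin, sigmoidMax, sigmoidScale));
}
```

Starting from t = SigmoidInitialTime, the time is clamped at zero, so t = 0 always corresponds to the largest gain; this is why a positive SigmoidInitialTime makes the optimization start with smaller steps.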

NumberOfGradientMeasurements: Number of gradients N to estimate the average square magnitudes of the exact gradient and the approximation error. The parameter can be specified for each resolution, or for all resolutions at once.

example: (NumberOfGradientMeasurements 10)

Default value: 0, which means that the value is estimated automatically. In principle, the more the better, but the slower. In practice, N=10 is usually sufficient, but the automatic estimation achieved by N=0 also works well. The parameter only has influence when AutomaticParameterEstimation is used.

NumberOfJacobianMeasurements: The number of voxels M where the Jacobian is measured, which is used to estimate the covariance matrix. The parameter can be specified for each resolution, or for all resolutions at once.

example: (NumberOfJacobianMeasurements 5000 10000 20000)

Default value: M = max(1000, nrofparams), with nrofparams the number of transform parameters. This is a rather crude rule of thumb, which seems to work in practice. In principle, the more the better, but the slower. The parameter only has influence when AutomaticParameterEstimation is used.
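The default rule for NumberOfJacobianMeasurements reduces to a one-liner. `DefaultNumberOfJacobianMeasurements` is an illustrative name, not an elastix function.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Default number of Jacobian measurements: M = max(1000, nrofparams).
std::size_t DefaultNumberOfJacobianMeasurements(std::size_t numberOfTransformParameters)
{
  return std::max<std::size_t>(1000, numberOfTransformParameters);
}
```

A 3D affine transform (12 parameters) would thus use M = 1000, while a transform with 30000 parameters would use M = 30000.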

NumberOfSamplesForNoiseCompensationFactor: The number of image samples used to compute the 'exact' gradient. The samples are chosen on a uniform grid. The parameter can be specified for each resolution, or for all resolutions at once.

example: (NumberOfSamplesForNoiseCompensationFactor 100000)

Default/recommended: 100000. This generally works well. If the image is smaller, the number of samples is automatically reduced. In principle, the more the better, but the slower. The parameter only has influence when AutomaticParameterEstimation is used.

NumberOfSamplesForPrecondition: The number of image samples used to compute the gradient for the preconditioner. The samples are chosen by a random sampler. The parameter can be specified for each resolution, or for all resolutions at once.

example: (NumberOfSamplesForPrecondition 500000)

Default/recommended: 500000. This generally works well. If the image is smaller, the number of samples is automatically reduced. In principle, the more the better, but the slower. The parameter only has influence when AutomaticParameterEstimation is used.

RegularizationKappa: The regularization value kappa of the preconditioner. The parameter can be specified for each resolution, or for all resolutions at once.

example: (RegularizationKappa 0.9)

Definition at line 190 of file elxAdaGrad.h.

Static Public Member Functions | |

| static Pointer | New () |

Static Public Member Functions inherited from itk::AdaptiveStepsizeOptimizer | |

| static Pointer | New () |

Static Public Member Functions inherited from itk::StandardGradientDescentOptimizer | |

| static Pointer | New () |

Static Public Member Functions inherited from itk::GradientDescentOptimizer2 | |

| static Pointer | New () |

Static Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| static Pointer | New () |

Static Public Member Functions inherited from elastix::BaseComponent | |

| template<typename TBaseComponent > | |

| static auto | AsITKBaseType (TBaseComponent *const baseComponent) -> decltype(baseComponent->GetAsITKBaseType()) |

| static void | InitializeElastixExecutable () |

| static bool | IsElastixLibrary () |

Protected Member Functions | |

| AdaGrad () | |

| virtual void | AddRandomPerturbation (ParametersType &parameters, double sigma) |

| virtual void | AutomaticPreconditionerEstimation () |

| virtual void | GetScaledDerivativeWithExceptionHandling (const ParametersType &parameters, DerivativeType &derivative) |

| itkStaticConstMacro (FixedImageDimension, unsigned int, FixedImageType::ImageDimension) | |

| itkStaticConstMacro (MovingImageDimension, unsigned int, MovingImageType::ImageDimension) | |

| virtual void | SampleGradients (const ParametersType &mu0, double perturbationSigma, double &gg, double &ee) |

| ~AdaGrad () override=default | |

Protected Member Functions inherited from itk::AdaptiveStepsizeOptimizer | |

| AdaptiveStepsizeOptimizer () | |

| void | UpdateCurrentTime () override |

| ~AdaptiveStepsizeOptimizer () override=default | |

Protected Member Functions inherited from itk::StandardGradientDescentOptimizer | |

| virtual double | Compute_a (double k) const |

| StandardGradientDescentOptimizer () | |

| ~StandardGradientDescentOptimizer () override=default | |

Protected Member Functions inherited from itk::GradientDescentOptimizer2 | |

| GradientDescentOptimizer2 () | |

| void | PrintSelf (std::ostream &os, Indent indent) const override |

| ~GradientDescentOptimizer2 () override=default | |

Protected Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| virtual void | GetScaledDerivative (const ParametersType &parameters, DerivativeType &derivative) const |

| virtual MeasureType | GetScaledValue (const ParametersType &parameters) const |

| virtual void | GetScaledValueAndDerivative (const ParametersType &parameters, MeasureType &value, DerivativeType &derivative) const |

| void | PrintSelf (std::ostream &os, Indent indent) const override |

| ScaledSingleValuedNonLinearOptimizer () | |

| void | SetCurrentPosition (const ParametersType &param) override |

| virtual void | SetScaledCurrentPosition (const ParametersType &parameters) |

| ~ScaledSingleValuedNonLinearOptimizer () override=default | |

Protected Member Functions inherited from elastix::OptimizerBase< TElastix > | |

| virtual bool | GetNewSamplesEveryIteration () const |

| OptimizerBase ()=default | |

| virtual void | SelectNewSamples () |

| ~OptimizerBase () override=default | |

Protected Member Functions inherited from elastix::BaseComponentSE< TElastix > | |

| BaseComponentSE ()=default | |

| ~BaseComponentSE () override=default | |

Protected Member Functions inherited from elastix::BaseComponent | |

| BaseComponent ()=default | |

| virtual | ~BaseComponent ()=default |

Additional Inherited Members | |

Static Protected Member Functions inherited from elastix::OptimizerBase< TElastix > | |

| static void | PrintSettingsVector (const SettingsVectorType &settings) |

[protected] Definition at line 348 of file elxAdaGrad.h.

[protected] Definition at line 345 of file elxAdaGrad.h.

[protected] Definition at line 343 of file elxAdaGrad.h.

[protected] Definition at line 350 of file elxAdaGrad.h.

[protected] Definition at line 316 of file elxAdaGrad.h.

using elastix::AdaGrad< TElastix >::ConstPointer = itk::SmartPointer<const Self>

Definition at line 202 of file elxAdaGrad.h.

[protected] Definition at line 341 of file elxAdaGrad.h.

[protected] Definition at line 302 of file elxAdaGrad.h.

[protected] Definition at line 303 of file elxAdaGrad.h.

[protected] Definition at line 301 of file elxAdaGrad.h.

[protected] Protected typedefs.

Definition at line 298 of file elxAdaGrad.h.

[protected] Definition at line 329 of file elxAdaGrad.h.

[protected] Definition at line 328 of file elxAdaGrad.h.

[protected] Definition at line 325 of file elxAdaGrad.h.

[protected] Definition at line 324 of file elxAdaGrad.h.

[protected] Definition at line 323 of file elxAdaGrad.h.

[protected] Definition at line 322 of file elxAdaGrad.h.

[protected] Definition at line 327 of file elxAdaGrad.h.

[protected] Definition at line 326 of file elxAdaGrad.h.

[protected] Definition at line 331 of file elxAdaGrad.h.

[protected] Definition at line 330 of file elxAdaGrad.h.

[protected] Definition at line 321 of file elxAdaGrad.h.

[protected] Samplers.

Definition at line 320 of file elxAdaGrad.h.

using elastix::AdaGrad< TElastix >::ITKBaseType = typename Superclass2::ITKBaseType

Definition at line 224 of file elxAdaGrad.h.

[protected] Definition at line 304 of file elxAdaGrad.h.

[protected] Definition at line 306 of file elxAdaGrad.h.

[protected] Definition at line 307 of file elxAdaGrad.h.

[protected] Definition at line 299 of file elxAdaGrad.h.

[protected] Definition at line 346 of file elxAdaGrad.h.

[protected] Definition at line 310 of file elxAdaGrad.h.

using elastix::AdaGrad< TElastix >::Pointer = itk::SmartPointer<Self>

Definition at line 201 of file elxAdaGrad.h.

[protected] Definition at line 314 of file elxAdaGrad.h.

[protected] Definition at line 312 of file elxAdaGrad.h.

[protected] Definition at line 335 of file elxAdaGrad.h.

[protected] Other protected typedefs.

Definition at line 334 of file elxAdaGrad.h.

using elastix::AdaGrad< TElastix >::Self = AdaGrad

Standard ITK.

Definition at line 198 of file elxAdaGrad.h.

[protected] Definition at line 133 of file elxOptimizerBase.h.

using elastix::AdaGrad< TElastix >::SizeValueType = itk::SizeValueType

Definition at line 225 of file elxAdaGrad.h.

using elastix::AdaGrad< TElastix >::Superclass1 = AdaptiveStepsizeOptimizer

Definition at line 199 of file elxAdaGrad.h.

using elastix::AdaGrad< TElastix >::Superclass2 = OptimizerBase<TElastix>

Definition at line 200 of file elxAdaGrad.h.

[protected] Typedefs for support of sparse Jacobians and AdvancedTransforms.

Definition at line 338 of file elxAdaGrad.h.

[protected] Definition at line 305 of file elxAdaGrad.h.

[protected]

[override, protected, default]

[protected, virtual]

Helper function that adds a random perturbation delta to the input parameters, with delta ~ sigma * N(0,I). Used by SampleGradients.
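The perturbation delta ~ sigma * N(0, I) draws an independent standard normal value for every parameter and scales it by sigma. Below is a free-function sketch using the C++ standard library; the class keeps its own RandomGenerator member for this purpose, so `std::mt19937` is only a stand-in, and `AddRandomPerturbationSketch` is an illustrative name.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Add delta ~ sigma * N(0, I) to the parameter vector, in place.
void AddRandomPerturbationSketch(std::vector<double> & parameters, double sigma, std::mt19937 & rng)
{
  assert(sigma >= 0.0);
  std::normal_distribution<double> standardNormal(0.0, 1.0);
  for (double & p : parameters)
  {
    p += sigma * standardNormal(rng); // independent N(0, sigma^2) per component
  }
}
```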

[override, virtual]

Advance one step following the gradient direction.

Reimplemented from itk::GradientDescentOptimizer2.
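For a minimization problem, advancing one step means moving against the gradient: mu_{k+1} = mu_k - a_k g_k. The sketch below shows only this plain gradient-descent update; it deliberately ignores parameter scales and any preconditioning that the actual AdaGrad implementation may apply, and `AdvanceOneStepSketch` is an illustrative name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Plain gradient-descent step for minimization: mu <- mu - gain * gradient.
void AdvanceOneStepSketch(std::vector<double> & position,
                          const std::vector<double> & gradient,
                          double gain)
{
  assert(position.size() == gradient.size());
  for (std::size_t i = 0; i < position.size(); ++i)
  {
    position[i] -= gain * gradient[i];
  }
}
```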

[override, virtual]

Reimplemented from elastix::BaseComponent.

[override, virtual]

Reimplemented from elastix::BaseComponent.

[override, virtual]

Reimplemented from elastix::BaseComponent.

[protected, virtual]

Selects the method used to estimate reasonable values for the parameters SP_a, SP_alpha (=1), SigmoidMin, SigmoidMax (=1), and SigmoidScale.

[override, virtual]

Reimplemented from elastix::BaseComponent.

[override, virtual]

Methods invoked by elastix, in which parameters can be set and progress information can be printed.

Reimplemented from elastix::BaseComponent.

elastix::AdaGrad< TElastix >::elxClassNameMacro ( "AdaGrad< TElastix >" )

Name of this class. Use this name in the parameter file to select this specific optimizer. example: (Optimizer "AdaGrad")

[virtual]

[virtual]

Run-time type information (and related methods).

Reimplemented from itk::AdaptiveStepsizeOptimizer.

[virtual]

[virtual]

[virtual]

[protected, virtual]

Helper function that calls GetScaledValueAndDerivative and does some exception handling. Used by SampleGradients.

elastix::AdaGrad< TElastix >::ITK_DISALLOW_COPY_AND_MOVE ( AdaGrad< TElastix > )

[protected]

[protected]

[override]

Stop optimization and pass on exception.

[static]

Method for creation through the object factory.

[override, virtual]

If automatic gain estimation is desired, estimate SP_a, SP_alpha, SigmoidScale, SigmoidMax, and SigmoidMin. After that, call the Superclass' implementation.

Reimplemented from itk::GradientDescentOptimizer2.

[protected, virtual]

Measures some derivatives, exact and approximated, and returns the squared magnitudes of the gradient and of the approximation error. Needed for the automatic parameter estimation. Gradients are measured at positions mu_n, which are generated according to mu_n - mu_0 ~ N(0, perturbationSigma^2 I); gg = g^T g, etc.
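The measurement can be sketched as averaging over N perturbed positions: gg is the mean of g^T g for the exact gradient g, and ee is the mean of e^T e for the approximation error e (approximate gradient minus exact gradient). In this sketch the two gradient functions are passed in as callbacks, which is an assumption made to keep it self-contained; in elastix the "exact" gradient comes from the NumberOfSamplesForNoiseCompensationFactor samples on a uniform grid. `SampleGradientsSketch` is an illustrative name.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <numeric>
#include <random>
#include <vector>

using Vector = std::vector<double>;
using GradientFunction = std::function<Vector(const Vector &)>;

// Estimate gg = mean(g^T g) and ee = mean(e^T e), e = gApprox - g, over N
// positions mu_n with mu_n - mu_0 ~ N(0, sigma^2 I).
void SampleGradientsSketch(const Vector & mu0, double sigma, int N,
                           const GradientFunction & exactGradient,
                           const GradientFunction & approxGradient,
                           std::mt19937 & rng, double & gg, double & ee)
{
  assert(N > 0);
  std::normal_distribution<double> standardNormal(0.0, 1.0);
  gg = 0.0;
  ee = 0.0;
  for (int n = 0; n < N; ++n)
  {
    Vector mu = mu0;
    for (double & p : mu)
    {
      p += sigma * standardNormal(rng); // perturbed position mu_n
    }
    const Vector g = exactGradient(mu);
    const Vector gApprox = approxGradient(mu);
    assert(g.size() == gApprox.size());
    gg += std::inner_product(g.begin(), g.end(), g.begin(), 0.0);
    for (std::size_t i = 0; i < g.size(); ++i)
    {
      const double e = gApprox[i] - g[i];
      ee += e * e;
    }
  }
  gg /= N; // average squared magnitude of the exact gradient
  ee /= N; // average squared magnitude of the approximation error
}
```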

[virtual]

Set/Get whether automatic parameter estimation is desired. If true, make sure to set the maximum step length.

The following parameters are automatically determined: SP_a, SP_alpha (=1), SigmoidMin, SigmoidMax (=1), SigmoidScale. A usually suitable value for SP_A is 20, which is the default setting, if not specified by the user.

[virtual]

Set/Get the MaximumNumberOfSamplingAttempts.

[virtual]

Set/Get maximum step length.

[virtual]

Set/Get regularization value kappa.

[override]

Check if any scales are set, and set the UseScales flag on or off; after that call the superclass' implementation.

[private] Definition at line 404 of file elxAdaGrad.h.

[protected] The transform stored as AdvancedTransform.

Definition at line 363 of file elxAdaGrad.h.

[private] Definition at line 406 of file elxAdaGrad.h.

[private] Definition at line 414 of file elxAdaGrad.h.

[protected] Definition at line 372 of file elxAdaGrad.h.

[private] Definition at line 412 of file elxAdaGrad.h.

[protected] Definition at line 370 of file elxAdaGrad.h.

[private] Private variables for band size estimation of the covariance matrix.

Definition at line 417 of file elxAdaGrad.h.

[private] Private variables for the sampling attempts.

Definition at line 411 of file elxAdaGrad.h.

[private] Definition at line 407 of file elxAdaGrad.h.

[private] Definition at line 408 of file elxAdaGrad.h.

[protected] Definition at line 369 of file elxAdaGrad.h.

[private] Definition at line 418 of file elxAdaGrad.h.

[protected] Some options for automatic parameter estimation.

Definition at line 356 of file elxAdaGrad.h.

[protected] Definition at line 357 of file elxAdaGrad.h.

[protected] Definition at line 358 of file elxAdaGrad.h.

[protected] Definition at line 359 of file elxAdaGrad.h.

[protected] Definition at line 360 of file elxAdaGrad.h.

[private] Definition at line 422 of file elxAdaGrad.h.

[private] Definition at line 413 of file elxAdaGrad.h.

[protected] RandomGenerator for AddRandomPerturbation.

Definition at line 366 of file elxAdaGrad.h.

[protected] Definition at line 371 of file elxAdaGrad.h.

[protected] Variable to store the automatically determined settings for each resolution.

Definition at line 353 of file elxAdaGrad.h.

[protected] Definition at line 368 of file elxAdaGrad.h.

[private] The flag for using noise compensation.

Definition at line 421 of file elxAdaGrad.h.

Generated on 2024-07-17 for elastix by Doxygen 1.11.0 (9b424b03c9833626cd435af22a444888fbbb192d)