#include <itkStandardGradientDescentOptimizer.h>

This class implements a gradient descent optimizer with a decaying gain.

If

![\[ x(k+1) = x(k) - a(k) dC/dx \]](form_78.png)

The gain

![\[ a(k) = a / (A + k + 1)^\alpha \]](form_130.png)

.

It is very suitable to be used in combination with a stochastic estimate of the gradient

NewSamplesEveryIteration to "true" to achieve this effect. For more information on this strategy, you may have a look at:

S. Klein, M. Staring, J.P.W. Pluim, "Comparison of gradient approximation techniques for optimisation of mutual information in nonrigid registration", in: SPIE Medical Imaging: Image Processing, Editor(s): J.M. Fitzpatrick, J.M. Reinhardt, SPIE press, 2005, vol. 5747, Proceedings of SPIE, pp. 192-203.

Or:

S. Klein, M. Staring, J.P.W. Pluim, "Evaluation of Optimization Methods for Nonrigid Medical Image Registration using Mutual Information and B-Splines" IEEE Transactions on Image Processing, 2007, nr. 16(12), December.

This class also serves as a base class for other GradientDescent type algorithms, like the AcceleratedGradientDescentOptimizer.

Definition at line 65 of file itkStandardGradientDescentOptimizer.h.

Public Member Functions | |

| void | AdvanceOneStep () override |

| virtual const char * | GetClassName () const |

| virtual double | GetCurrentTime () const |

| virtual double | GetInitialTime () const |

| virtual double | GetParam_A () const |

| virtual double | GetParam_a () const |

| virtual double | GetParam_alpha () const |

| ITK_DISALLOW_COPY_AND_MOVE (StandardGradientDescentOptimizer) | |

| virtual void | ResetCurrentTimeToInitialTime () |

| virtual void | SetInitialTime (double _arg) |

| virtual void | SetParam_A (double _arg) |

| virtual void | SetParam_a (double _arg) |

| virtual void | SetParam_alpha (double _arg) |

| void | StartOptimization () override |

Public Member Functions inherited from itk::GradientDescentOptimizer2 Public Member Functions inherited from itk::GradientDescentOptimizer2 | |

| virtual unsigned int | GetCurrentIteration () const |

| virtual const DerivativeType & | GetGradient () |

| virtual const double & | GetLearningRate () |

| virtual const unsigned long & | GetNumberOfIterations () |

| virtual const DerivativeType & | GetSearchDirection () |

| virtual const StopConditionType & | GetStopCondition () |

| virtual const double & | GetValue () |

| ITK_DISALLOW_COPY_AND_MOVE (GradientDescentOptimizer2) | |

| virtual void | MetricErrorResponse (ExceptionObject &err) |

| virtual void | ResumeOptimization () |

| virtual void | SetLearningRate (double _arg) |

| virtual void | SetNumberOfIterations (unsigned long _arg) |

| void | StartOptimization () override |

| virtual void | StopOptimization () |

Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| const ParametersType & | GetCurrentPosition () const override |

| virtual bool | GetMaximize () const |

| virtual const ScaledCostFunctionType * | GetScaledCostFunction () |

| virtual const ParametersType & | GetScaledCurrentPosition () |

| bool | GetUseScales () const |

| virtual void | InitializeScales () |

| ITK_DISALLOW_COPY_AND_MOVE (ScaledSingleValuedNonLinearOptimizer) | |

| virtual void | MaximizeOff () |

| virtual void | MaximizeOn () |

| void | SetCostFunction (CostFunctionType *costFunction) override |

| virtual void | SetMaximize (bool _arg) |

| virtual void | SetUseScales (bool arg) |

Static Public Member Functions | |

| static Pointer | New () |

Static Public Member Functions inherited from itk::GradientDescentOptimizer2 Static Public Member Functions inherited from itk::GradientDescentOptimizer2 | |

| static Pointer | New () |

Static Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer Static Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| static Pointer | New () |

Protected Member Functions | |

| virtual double | Compute_a (double k) const |

| StandardGradientDescentOptimizer () | |

| virtual void | UpdateCurrentTime () |

| ~StandardGradientDescentOptimizer () override=default | |

Protected Member Functions inherited from itk::GradientDescentOptimizer2 Protected Member Functions inherited from itk::GradientDescentOptimizer2 | |

| GradientDescentOptimizer2 () | |

| void | PrintSelf (std::ostream &os, Indent indent) const override |

| ~GradientDescentOptimizer2 () override=default | |

Protected Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer Protected Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| virtual void | GetScaledDerivative (const ParametersType ¶meters, DerivativeType &derivative) const |

| virtual MeasureType | GetScaledValue (const ParametersType ¶meters) const |

| virtual void | GetScaledValueAndDerivative (const ParametersType ¶meters, MeasureType &value, DerivativeType &derivative) const |

| void | PrintSelf (std::ostream &os, Indent indent) const override |

| ScaledSingleValuedNonLinearOptimizer () | |

| void | SetCurrentPosition (const ParametersType ¶m) override |

| virtual void | SetScaledCurrentPosition (const ParametersType ¶meters) |

| ~ScaledSingleValuedNonLinearOptimizer () override=default | |

Protected Attributes | |

| double | m_CurrentTime { 0.0 } |

| bool | m_UseConstantStep { false } |

Protected Attributes inherited from itk::GradientDescentOptimizer2 Protected Attributes inherited from itk::GradientDescentOptimizer2 | |

| DerivativeType | m_Gradient {} |

| DerivativeType | m_SearchDirection {} |

| StopConditionType | m_StopCondition { MaximumNumberOfIterations } |

Protected Attributes inherited from itk::ScaledSingleValuedNonLinearOptimizer Protected Attributes inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| ScaledCostFunctionPointer | m_ScaledCostFunction {} |

| ParametersType | m_ScaledCurrentPosition {} |

Private Attributes | |

| double | m_InitialTime { 0.0 } |

| double | m_Param_A { 1.0 } |

| double | m_Param_a { 1.0 } |

| double | m_Param_alpha { 0.602 } |

| using itk::StandardGradientDescentOptimizer::ConstPointer = SmartPointer<const Self> |

Definition at line 75 of file itkStandardGradientDescentOptimizer.h.

| using itk::StandardGradientDescentOptimizer::Pointer = SmartPointer<Self> |

Definition at line 74 of file itkStandardGradientDescentOptimizer.h.

Definition at line 78 of file itkScaledSingleValuedNonLinearOptimizer.h.

Definition at line 77 of file itkScaledSingleValuedNonLinearOptimizer.h.

Definition at line 76 of file itkScaledSingleValuedNonLinearOptimizer.h.

Standard ITK.

Definition at line 71 of file itkStandardGradientDescentOptimizer.h.

Definition at line 72 of file itkStandardGradientDescentOptimizer.h.

Codes of stopping conditions The MinimumStepSize stopcondition never occurs, but may be implemented in inheriting classes

Definition at line 83 of file itkGradientDescentOptimizer2.h.

|

protected |

|

overrideprotecteddefault |

|

overridevirtual |

Sets a new LearningRate before calling the Superclass' implementation, and updates the current time.

Reimplemented from itk::GradientDescentOptimizer2.

Function to compute the parameter at time/iteration k.

|

virtual |

Run-time type information (and related methods).

Reimplemented from itk::GradientDescentOptimizer2.

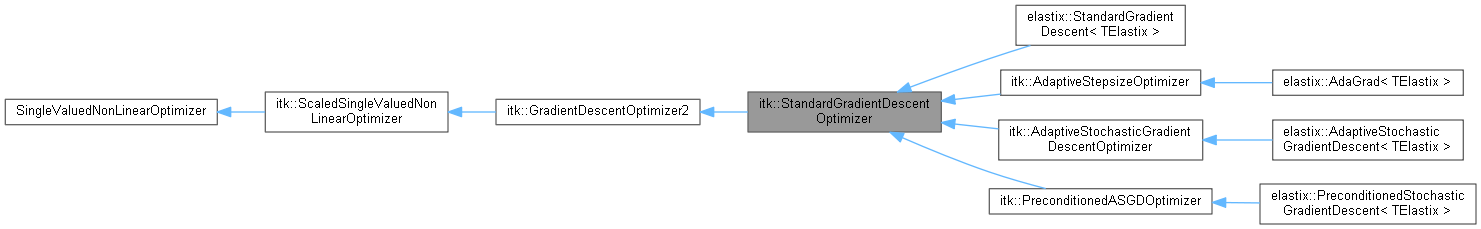

Reimplemented in elastix::AdaGrad< TElastix >, elastix::AdaptiveStochasticGradientDescent< TElastix >, elastix::PreconditionedStochasticGradientDescent< TElastix >, elastix::StandardGradientDescent< TElastix >, itk::AdaptiveStepsizeOptimizer, itk::AdaptiveStochasticGradientDescentOptimizer, and itk::PreconditionedASGDOptimizer.

|

virtual |

Get the current time. This equals the CurrentIteration in this base class but may be different in inheriting classes, such as the AccelerateGradientDescent

|

virtual |

|

virtual |

|

virtual |

|

virtual |

| itk::StandardGradientDescentOptimizer::ITK_DISALLOW_COPY_AND_MOVE | ( | StandardGradientDescentOptimizer | ) |

|

static |

Method for creation through the object factory.

|

inlinevirtual |

Set the current time to the initial time. This can be useful to 'reset' the optimisation, for example if you changed the cost function while optimisation. Be careful with this function.

Definition at line 130 of file itkStandardGradientDescentOptimizer.h.

|

virtual |

Set/Get the initial time. Should be >=0. This function is superfluous, since Param_A does effectively the same. However, in inheriting classes, like the AcceleratedGradientDescent the initial time may have a different function than Param_A. Default: 0.0

|

virtual |

Set/Get A.

|

virtual |

Set/Get a.

|

virtual |

Set/Get alpha.

|

override |

Set current time to 0 and call superclass' implementation.

|

protectedvirtual |

Function to update the current time This function just increments the CurrentTime by 1. Inheriting functions may implement something smarter, for example, dependent on the progress

Reimplemented in itk::AdaptiveStepsizeOptimizer, itk::AdaptiveStochasticGradientDescentOptimizer, and itk::PreconditionedASGDOptimizer.

|

protected |

The current time, which serves as input for Compute_a

Definition at line 152 of file itkStandardGradientDescentOptimizer.h.

|

private |

Settings

Definition at line 164 of file itkStandardGradientDescentOptimizer.h.

|

private |

Definition at line 160 of file itkStandardGradientDescentOptimizer.h.

|

private |

Parameters, as described by Spall.

Definition at line 159 of file itkStandardGradientDescentOptimizer.h.

|

private |

Definition at line 161 of file itkStandardGradientDescentOptimizer.h.

|

protected |

Constant step size or others, different value of k.

Definition at line 155 of file itkStandardGradientDescentOptimizer.h.

Generated on 2024-07-17

for elastix by  1.11.0 (9b424b03c9833626cd435af22a444888fbbb192d) 1.11.0 (9b424b03c9833626cd435af22a444888fbbb192d) |