#include <itkGenericConjugateGradientOptimizer.h>

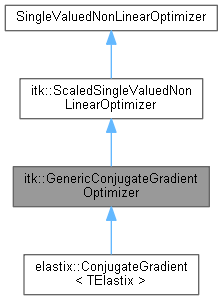

A set of conjugate gradient algorithms.

The step length is determined at each iteration by a line search routine. The itk::MoreThuenteLineSearchOptimizer works well.
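The interplay between the search direction and the line search can be illustrated with a minimal, self-contained sketch. It does not use the ITK classes: `DiagonalQuadratic`, `ExactStep` and `MinimizeWithConjugateGradient` are hypothetical names, the cost function is a simple diagonal quadratic, and an exact step along the search direction stands in for a real line search such as the Moré-Thuente one. The Fletcher-Reeves formula is used for beta purely as an example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;

// Inner product of two equally sized vectors.
double Dot(const Vector & a, const Vector & b)
{
  assert(a.size() == b.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i)
  {
    sum += a[i] * b[i];
  }
  return sum;
}

// Hypothetical test problem (not part of ITK):
// f(x) = 0.5 * sum_i a[i] * x[i]^2 - sum_i b[i] * x[i], with all a[i] > 0.
struct DiagonalQuadratic
{
  Vector a;
  Vector b;

  Vector Gradient(const Vector & x) const
  {
    assert(x.size() == a.size() && x.size() == b.size());
    Vector g(x.size());
    for (std::size_t i = 0; i < x.size(); ++i)
    {
      g[i] = a[i] * x[i] - b[i];
    }
    return g;
  }

  // Exact minimiser of f(x + step * d) over step; stands in for a real line search.
  double ExactStep(const Vector & g, const Vector & d) const
  {
    double curvature = 0.0;
    for (std::size_t i = 0; i < d.size(); ++i)
    {
      curvature += a[i] * d[i] * d[i];
    }
    return -Dot(g, d) / curvature;
  }
};

Vector MinimizeWithConjugateGradient(const DiagonalQuadratic & f,
                                     Vector                    x,
                                     unsigned long             maximumNumberOfIterations,
                                     double                    gradientMagnitudeTolerance)
{
  Vector g = f.Gradient(x);
  Vector d(g.size());
  for (std::size_t i = 0; i < g.size(); ++i)
  {
    d[i] = -g[i]; // first iteration: d_0 = -g_0
  }

  for (unsigned long k = 0; k < maximumNumberOfIterations && std::sqrt(Dot(g, g)) > gradientMagnitudeTolerance; ++k)
  {
    const double step = f.ExactStep(g, d); // "line search" along d
    for (std::size_t i = 0; i < x.size(); ++i)
    {
      x[i] += step * d[i];
    }
    const Vector gNew = f.Gradient(x);
    const double beta = Dot(gNew, gNew) / Dot(g, g); // Fletcher-Reeves, as an example
    for (std::size_t i = 0; i < d.size(); ++i)
    {
      d[i] = -gNew[i] + beta * d[i]; // d_k = -g_k + beta_k * d_{k-1}
    }
    g = gNew;
  }
  return x;
}
```

For a quadratic with n parameters and an exact step, this loop reaches the minimum in at most n iterations up to rounding error; with a general cost function an inexact line search is needed instead.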

Definition at line 40 of file itkGenericConjugateGradientOptimizer.h.

Static Public Member Functions | |

| static Pointer | New () |

Static Public Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| static Pointer | New () |

Protected Member Functions | |

| void | AddBetaDefinition (const BetaDefinitionType &name, ComputeBetaFunctionType function) |

| virtual double | ComputeBeta (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) |

| double | ComputeBetaDY (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) |

| double | ComputeBetaDYHS (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) |

| double | ComputeBetaFR (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) |

| double | ComputeBetaHS (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) |

| double | ComputeBetaPR (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) |

| double | ComputeBetaSD (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) |

| virtual void | ComputeSearchDirection (const DerivativeType &previousGradient, const DerivativeType &gradient, ParametersType &searchDir) |

| GenericConjugateGradientOptimizer () | |

| virtual void | LineSearch (const ParametersType searchDir, double &step, ParametersType &x, MeasureType &f, DerivativeType &g) |

| void | PrintSelf (std::ostream &os, Indent indent) const override |

| virtual void | SetInLineSearch (bool _arg) |

| virtual bool | TestConvergence (bool firstLineSearchDone) |

| ~GenericConjugateGradientOptimizer () override=default | |

Protected Member Functions inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| virtual void | GetScaledDerivative (const ParametersType &parameters, DerivativeType &derivative) const |

| virtual MeasureType | GetScaledValue (const ParametersType &parameters) const |

| virtual void | GetScaledValueAndDerivative (const ParametersType &parameters, MeasureType &value, DerivativeType &derivative) const |

| void | PrintSelf (std::ostream &os, Indent indent) const override |

| ScaledSingleValuedNonLinearOptimizer () | |

| void | SetCurrentPosition (const ParametersType &param) override |

| virtual void | SetScaledCurrentPosition (const ParametersType &parameters) |

| ~ScaledSingleValuedNonLinearOptimizer () override=default | |

Protected Attributes | |

| BetaDefinitionType | m_BetaDefinition {} |

| BetaDefinitionMapType | m_BetaDefinitionMap {} |

| DerivativeType | m_CurrentGradient {} |

| unsigned long | m_CurrentIteration { 0 } |

| double | m_CurrentStepLength { 0.0 } |

| MeasureType | m_CurrentValue { 0.0 } |

| bool | m_InLineSearch { false } |

| bool | m_PreviousGradientAndSearchDirValid { false } |

| bool | m_Stop { false } |

| StopConditionType | m_StopCondition { StopConditionType::Unknown } |

| bool | m_UseDefaultMaxNrOfItWithoutImprovement { true } |

Protected Attributes inherited from itk::ScaledSingleValuedNonLinearOptimizer | |

| ScaledCostFunctionPointer | m_ScaledCostFunction {} |

| ParametersType | m_ScaledCurrentPosition {} |

Private Attributes | |

| double | m_GradientMagnitudeTolerance { 1e-5 } |

| LineSearchOptimizerPointer | m_LineSearchOptimizer { nullptr } |

| unsigned long | m_MaximumNumberOfIterations { 100 } |

| unsigned long | m_MaxNrOfItWithoutImprovement { 10 } |

| double | m_ValueTolerance { 1e-5 } |

| using itk::GenericConjugateGradientOptimizer::BetaDefinitionMapType = std::map<BetaDefinitionType, ComputeBetaFunctionType> |

Definition at line 69 of file itkGenericConjugateGradientOptimizer.h.

| using itk::GenericConjugateGradientOptimizer::BetaDefinitionType = std::string |

Definition at line 68 of file itkGenericConjugateGradientOptimizer.h.

Typedef for a function that computes \(\beta\).

Definition at line 65 of file itkGenericConjugateGradientOptimizer.h.

| using itk::GenericConjugateGradientOptimizer::ConstPointer = SmartPointer<const Self> |

Definition at line 48 of file itkGenericConjugateGradientOptimizer.h.

| using itk::GenericConjugateGradientOptimizer::LineSearchOptimizerPointer = LineSearchOptimizerType::Pointer |

Definition at line 61 of file itkGenericConjugateGradientOptimizer.h.

Definition at line 60 of file itkGenericConjugateGradientOptimizer.h.

| using itk::GenericConjugateGradientOptimizer::Pointer = SmartPointer<Self> |

Definition at line 47 of file itkGenericConjugateGradientOptimizer.h.

Definition at line 86 of file itkScaledSingleValuedNonLinearOptimizer.h.

Definition at line 85 of file itkScaledSingleValuedNonLinearOptimizer.h.

Definition at line 45 of file itkGenericConjugateGradientOptimizer.h.

Definition at line 46 of file itkGenericConjugateGradientOptimizer.h.

| enum class itk::GenericConjugateGradientOptimizer::StopConditionType | strong |

| Enumerator | |
|---|---|
| MetricError | |
| LineSearchError | |
| MaximumNumberOfIterations | |
| GradientMagnitudeTolerance | |
| ValueTolerance | |
| InfiniteBeta | |
| Unknown | |

Definition at line 71 of file itkGenericConjugateGradientOptimizer.h.

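| itk::GenericConjugateGradientOptimizer::GenericConjugateGradientOptimizer () | protected |

| itk::GenericConjugateGradientOptimizer::~GenericConjugateGradientOptimizer () | override protected default |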

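| void itk::GenericConjugateGradientOptimizer::AddBetaDefinition (const BetaDefinitionType &name, ComputeBetaFunctionType function) | protected |

Function to add a new \(\beta\) definition. The first argument should be a name via which a user can select this \(\beta\) definition.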
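The name-to-function registration described here can be sketched with a `std::map` from strings to member-function pointers, mirroring the `BetaDefinitionMapType` alias. This is a simplified stand-in, not the ITK class: `BetaRegistry`, the `Vector` alias and the `"ZeroBeta"` definition are hypothetical, and the exact shape of `ComputeBetaFunctionType` in the real header is assumed rather than quoted.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-in for the optimizer; only the beta bookkeeping is modelled.
class BetaRegistry
{
public:
  using Vector = std::vector<double>;
  using BetaDefinitionType = std::string;
  // Assumed shape: a pointer to a member function taking (previousGradient, gradient, previousSearchDir).
  using ComputeBetaFunctionType = double (BetaRegistry::*)(const Vector &, const Vector &, const Vector &);
  using BetaDefinitionMapType = std::map<BetaDefinitionType, ComputeBetaFunctionType>;

  BetaRegistry()
  {
    this->AddBetaDefinition("FletcherReeves", &BetaRegistry::ComputeBetaFR);
    this->AddBetaDefinition("ZeroBeta", &BetaRegistry::ComputeBetaZero); // hypothetical name
  }

  // Select a definition by name.
  void SetBetaDefinition(const BetaDefinitionType & name) { m_BetaDefinition = name; }

  // Look up the selected definition and dispatch to it.
  double ComputeBeta(const Vector & previousGradient, const Vector & gradient, const Vector & previousSearchDir)
  {
    const auto it = m_BetaDefinitionMap.find(m_BetaDefinition);
    if (it == m_BetaDefinitionMap.end())
    {
      throw std::invalid_argument("Unknown beta definition: " + m_BetaDefinition);
    }
    return (this->*(it->second))(previousGradient, gradient, previousSearchDir);
  }

protected:
  void AddBetaDefinition(const BetaDefinitionType & name, ComputeBetaFunctionType function)
  {
    m_BetaDefinitionMap[name] = function;
  }

  // Textbook Fletcher-Reeves ratio of squared gradient norms.
  double ComputeBetaFR(const Vector & previousGradient, const Vector & gradient, const Vector &)
  {
    assert(previousGradient.size() == gradient.size());
    double numerator = 0.0;
    double denominator = 0.0;
    for (std::size_t i = 0; i < gradient.size(); ++i)
    {
      numerator += gradient[i] * gradient[i];
      denominator += previousGradient[i] * previousGradient[i];
    }
    return numerator / denominator;
  }

  // Always zero, which reduces the search direction to the negative gradient.
  double ComputeBetaZero(const Vector &, const Vector &, const Vector &) { return 0.0; }

private:
  BetaDefinitionType    m_BetaDefinition{ "FletcherReeves" };
  BetaDefinitionMapType m_BetaDefinitionMap{};
};
```

A derived class could call `AddBetaDefinition` in its constructor to make an extra formula selectable by name without touching the dispatch code.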

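| virtual double itk::GenericConjugateGradientOptimizer::ComputeBeta (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) | protected virtual |

Compute \(\beta\).

| double itk::GenericConjugateGradientOptimizer::ComputeBetaDY (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) | protected |

"DaiYuan"

| double itk::GenericConjugateGradientOptimizer::ComputeBetaDYHS (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) | protected |

"DaiYuanHestenesStiefel"

| double itk::GenericConjugateGradientOptimizer::ComputeBetaFR (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) | protected |

"FletcherReeves"

| double itk::GenericConjugateGradientOptimizer::ComputeBetaHS (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) | protected |

"HestenesStiefel"

| double itk::GenericConjugateGradientOptimizer::ComputeBetaPR (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) | protected |

"PolakRibiere"

| double itk::GenericConjugateGradientOptimizer::ComputeBetaSD (const DerivativeType &previousGradient, const DerivativeType &gradient, const ParametersType &previousSearchDir) | protected |

Different definitions of \(\beta\).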
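For orientation, the named definitions correspond to well-known formulas from the conjugate gradient literature. The sketch below writes out the textbook forms, with \(y = g_k - g_{k-1}\); the free functions, the `Vector` alias and the helper names are hypothetical, and the ITK implementation may differ in details such as safeguards against a zero denominator.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;

// Inner product of two equally sized vectors.
double Dot(const Vector & a, const Vector & b)
{
  assert(a.size() == b.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i)
  {
    sum += a[i] * b[i];
  }
  return sum;
}

// Inner product of v with y = gradient - previousGradient.
double DotWithGradientDifference(const Vector & v, const Vector & gradient, const Vector & previousGradient)
{
  assert(v.size() == gradient.size() && v.size() == previousGradient.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < v.size(); ++i)
  {
    sum += v[i] * (gradient[i] - previousGradient[i]);
  }
  return sum;
}

// Fletcher-Reeves: (g_k . g_k) / (g_{k-1} . g_{k-1})
double BetaFR(const Vector & previousGradient, const Vector & gradient, const Vector &)
{
  return Dot(gradient, gradient) / Dot(previousGradient, previousGradient);
}

// Polak-Ribiere: (g_k . y) / (g_{k-1} . g_{k-1})
double BetaPR(const Vector & previousGradient, const Vector & gradient, const Vector &)
{
  return DotWithGradientDifference(gradient, gradient, previousGradient) / Dot(previousGradient, previousGradient);
}

// Hestenes-Stiefel: (g_k . y) / (d_{k-1} . y)
double BetaHS(const Vector & previousGradient, const Vector & gradient, const Vector & previousSearchDir)
{
  return DotWithGradientDifference(gradient, gradient, previousGradient) /
         DotWithGradientDifference(previousSearchDir, gradient, previousGradient);
}

// Dai-Yuan: (g_k . g_k) / (d_{k-1} . y)
double BetaDY(const Vector & previousGradient, const Vector & gradient, const Vector & previousSearchDir)
{
  return Dot(gradient, gradient) / DotWithGradientDifference(previousSearchDir, gradient, previousGradient);
}

// Hybrid Dai-Yuan / Hestenes-Stiefel: max(0, min(beta_HS, beta_DY))
double BetaDYHS(const Vector & previousGradient, const Vector & gradient, const Vector & previousSearchDir)
{
  const double hs = BetaHS(previousGradient, gradient, previousSearchDir);
  const double dy = BetaDY(previousGradient, gradient, previousSearchDir);
  return std::max(0.0, std::min(hs, dy));
}
```

All five functions take the same three arguments as the member functions listed above, so any of them could be plugged into the same dispatch slot.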

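| virtual void itk::GenericConjugateGradientOptimizer::ComputeSearchDirection (const DerivativeType &previousGradient, const DerivativeType &gradient, ParametersType &searchDir) | protected virtual |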

Compute the search direction:

![\[ d_{k} = - g_{k} + \beta_{k} d_{k-1} \]](form_104.png)

In the first iteration the search direction is computed as:

![\[ d_{0} = - g_{0} \]](form_105.png)

On calling, searchDir should equal \(d_{k-1}\).
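The update can be written as a small in-place routine. This is a sketch under stated assumptions: the function name matches the member above, but the signature is simplified (it receives a precomputed `beta` and a hypothetical `previousValid` flag instead of the previous gradient), and `Vector` is a plain `std::vector<double>` rather than the ITK parameter type.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;

// On entry searchDir holds d_{k-1} (ignored when previousValid is false);
// on return it holds d_k = -g_k + beta * d_{k-1}, or -g_k in the first iteration.
void ComputeSearchDirection(const Vector & gradient, double beta, bool previousValid, Vector & searchDir)
{
  if (!previousValid)
  {
    // First iteration: d_0 = -g_0
    searchDir.assign(gradient.size(), 0.0);
    for (std::size_t i = 0; i < gradient.size(); ++i)
    {
      searchDir[i] = -gradient[i];
    }
    return;
  }

  assert(searchDir.size() == gradient.size());
  for (std::size_t i = 0; i < gradient.size(); ++i)
  {
    searchDir[i] = -gradient[i] + beta * searchDir[i];
  }
}
```

Updating `searchDir` in place avoids an extra allocation per iteration, which matters when the parameter vector is large.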

|

virtual |

|

virtual |

Reimplemented from itk::ScaledSingleValuedNonLinearOptimizer.

Reimplemented in elastix::ConjugateGradient< TElastix >.

|

virtual |

|

virtual |

Get information about optimization process:

|

virtual |

|

virtual |

|

virtual |

Setting: the minimum gradient magnitude. By default 1e-5.

The optimizer stops when:

|

virtual |

|

virtual |

Setting: the maximum number of iterations

|

virtual |

|

virtual |

|

virtual |

Setting: a stopping criterion, the value tolerance. By default 1e-5.

The optimizer stops when

![\[ 2.0 * | f_k - f_{k-1} | \le ValueTolerance * ( |f_k| + |f_{k-1}| + 1e-20 ) \]](form_103.png)

is satisfied MaxNrOfItWithoutImprovement times in a row.
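The criterion above, including the "in a row" requirement, can be sketched as a small counter. The class name `ValueToleranceCounter` and its `Update` method are hypothetical; resetting the counter whenever the inequality fails is an assumption drawn from the wording "in a row", not from the implementation.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper that tracks how often the value tolerance test passed consecutively.
class ValueToleranceCounter
{
public:
  ValueToleranceCounter(double valueTolerance, unsigned long maxNrOfItWithoutImprovement)
    : m_ValueTolerance(valueTolerance)
    , m_MaxNrOfItWithoutImprovement(maxNrOfItWithoutImprovement)
  {
    assert(maxNrOfItWithoutImprovement > 0);
  }

  // Feed the previous and current cost function values; returns true when the optimizer should stop.
  bool Update(double previousValue, double currentValue)
  {
    const bool noImprovement =
      2.0 * std::abs(currentValue - previousValue) <=
      m_ValueTolerance * (std::abs(currentValue) + std::abs(previousValue) + 1e-20);

    // Assumed behaviour: a single improving iteration resets the streak.
    m_Count = noImprovement ? m_Count + 1 : 0;
    return m_Count >= m_MaxNrOfItWithoutImprovement;
  }

private:
  double        m_ValueTolerance;
  unsigned long m_MaxNrOfItWithoutImprovement;
  unsigned long m_Count{ 0 };
};
```

The `1e-20` term keeps the right-hand side strictly positive when both values are exactly zero, so the test is a relative change check that degrades gracefully near zero.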

| itk::GenericConjugateGradientOptimizer::ITK_DISALLOW_COPY_AND_MOVE | ( | GenericConjugateGradientOptimizer | ) |

| itk::GenericConjugateGradientOptimizer::itkGetModifiableObjectMacro | ( | LineSearchOptimizer | , | LineSearchOptimizerType | ) |

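| virtual void itk::GenericConjugateGradientOptimizer::LineSearch (const ParametersType searchDir, double &step, ParametersType &x, MeasureType &f, DerivativeType &g) | protected virtual |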

Perform a line search along the search direction. On calling,

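| static Pointer itk::GenericConjugateGradientOptimizer::New () | static |

| void itk::GenericConjugateGradientOptimizer::PrintSelf (std::ostream &os, Indent indent) const | override protected |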

|

virtual |

| void itk::GenericConjugateGradientOptimizer::SetBetaDefinition | ( | const BetaDefinitionType & | arg | ) |

Setting: the definition of \(\beta\).

|

virtual |

| virtual void itk::GenericConjugateGradientOptimizer::SetInLineSearch (bool _arg) | protected virtual |

|

virtual |

Setting: the line search optimizer

|

virtual |

|

virtual |

Setting: the maximum number of iterations in a row that satisfy the value tolerance criterion. By default (if never set) equal to the number of parameters.

|

virtual |

|

override |

|

virtual |

| virtual bool itk::GenericConjugateGradientOptimizer::TestConvergence (bool firstLineSearchDone) | protected virtual |

Check whether convergence has occurred. The firstLineSearchDone flag lets an implementation of TestConvergence skip some convergence checks when no line search has been performed yet (that is, before the actual optimisation begins).

Reimplemented in elastix::ConjugateGradient< TElastix >.

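| BetaDefinitionType itk::GenericConjugateGradientOptimizer::m_BetaDefinition {} | protected |

The name of the BetaDefinition

Definition at line 169 of file itkGenericConjugateGradientOptimizer.h.

| BetaDefinitionMapType itk::GenericConjugateGradientOptimizer::m_BetaDefinitionMap {} | protected |

A mapping that links the names of the BetaDefinitions to functions that compute \(\beta\).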

Definition at line 173 of file itkGenericConjugateGradientOptimizer.h.

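| DerivativeType itk::GenericConjugateGradientOptimizer::m_CurrentGradient {} | protected |

Definition at line 147 of file itkGenericConjugateGradientOptimizer.h.

| unsigned long itk::GenericConjugateGradientOptimizer::m_CurrentIteration { 0 } | protected |

Definition at line 149 of file itkGenericConjugateGradientOptimizer.h.

| double itk::GenericConjugateGradientOptimizer::m_CurrentStepLength { 0.0 } | protected |

Definition at line 152 of file itkGenericConjugateGradientOptimizer.h.

| MeasureType itk::GenericConjugateGradientOptimizer::m_CurrentValue { 0.0 } | protected |

Definition at line 148 of file itkGenericConjugateGradientOptimizer.h.

| double itk::GenericConjugateGradientOptimizer::m_GradientMagnitudeTolerance { 1e-5 } | private |

Definition at line 259 of file itkGenericConjugateGradientOptimizer.h.

| bool itk::GenericConjugateGradientOptimizer::m_InLineSearch { false } | protected |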

Is true when the LineSearchOptimizer has been started.

Definition at line 159 of file itkGenericConjugateGradientOptimizer.h.

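| LineSearchOptimizerPointer itk::GenericConjugateGradientOptimizer::m_LineSearchOptimizer { nullptr } | private |

Definition at line 262 of file itkGenericConjugateGradientOptimizer.h.

| unsigned long itk::GenericConjugateGradientOptimizer::m_MaximumNumberOfIterations { 100 } | private |

Definition at line 257 of file itkGenericConjugateGradientOptimizer.h.

| unsigned long itk::GenericConjugateGradientOptimizer::m_MaxNrOfItWithoutImprovement { 10 } | private |

Definition at line 260 of file itkGenericConjugateGradientOptimizer.h.

| bool itk::GenericConjugateGradientOptimizer::m_PreviousGradientAndSearchDirValid { false } | protected |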

Flag that says whether the previous gradient and search direction are known. Typically 'false' at the start of optimization, or when a stopped optimisation is resumed (in the latter case the previous gradient and search direction may of course still be valid, but to be safe it is assumed that they are not).

Definition at line 166 of file itkGenericConjugateGradientOptimizer.h.

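| bool itk::GenericConjugateGradientOptimizer::m_Stop { false } | protected |

Definition at line 151 of file itkGenericConjugateGradientOptimizer.h.

| StopConditionType itk::GenericConjugateGradientOptimizer::m_StopCondition { StopConditionType::Unknown } | protected |

Definition at line 150 of file itkGenericConjugateGradientOptimizer.h.

| bool itk::GenericConjugateGradientOptimizer::m_UseDefaultMaxNrOfItWithoutImprovement { true } | protected |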

Flag that is true as long as the method SetMaxNrOfItWithoutImprovement has never been called.

Definition at line 156 of file itkGenericConjugateGradientOptimizer.h.

| double itk::GenericConjugateGradientOptimizer::m_ValueTolerance { 1e-5 } | private |

Definition at line 258 of file itkGenericConjugateGradientOptimizer.h.

Generated on 2024-07-17

for elastix by Doxygen 1.11.0 (9b424b03c9833626cd435af22a444888fbbb192d)