#include <itkRSGDEachParameterApartBaseOptimizer.h>

An optimizer based on gradient descent...

This optimizer

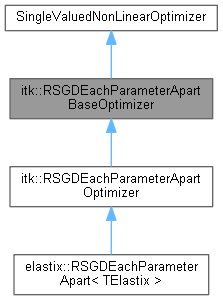

Definition at line 36 of file itkRSGDEachParameterApartBaseOptimizer.h.

◆ ConstPointer

◆ Pointer

◆ Self

◆ Superclass

◆ StopConditionType

Codes of stopping conditions.

| Enumerator |
|---|
| GradientMagnitudeTolerance |
| StepTooSmall |
| ImageNotAvailable |
| SamplesNotAvailable |
| MaximumNumberOfIterations |
| MetricError |

Definition at line 54 of file itkRSGDEachParameterApartBaseOptimizer.h.
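The stop codes above can be illustrated with a small standalone model. This is a hypothetical sketch, not ITK code: the enum `StopCondition` and the helper `CheckStopCondition` are invented names, and the order in which the checks run is an assumption. It only models the three codes that depend on the numeric settings; `ImageNotAvailable`, `SamplesNotAvailable` and `MetricError` depend on the cost function and its data, so they are left out.

```cpp
#include <cassert>
#include <optional>

// Hypothetical mirror of the StopConditionType enumerators.
enum class StopCondition
{
  GradientMagnitudeTolerance,
  StepTooSmall,
  ImageNotAvailable,
  SamplesNotAvailable,
  MaximumNumberOfIterations,
  MetricError
};

// Hypothetical helper: returns the reason to stop, or std::nullopt to keep
// iterating. The order of the checks is an assumption made for illustration.
std::optional<StopCondition>
CheckStopCondition(double gradientMagnitude, double gradientMagnitudeTolerance,
                   double currentStepLength, double minimumStepLength,
                   unsigned long currentIteration, unsigned long numberOfIterations)
{
  assert(gradientMagnitudeTolerance >= 0.0 && minimumStepLength >= 0.0);
  if (currentIteration >= numberOfIterations)
  {
    return StopCondition::MaximumNumberOfIterations;
  }
  if (gradientMagnitude < gradientMagnitudeTolerance)
  {
    return StopCondition::GradientMagnitudeTolerance;
  }
  if (currentStepLength < minimumStepLength)
  {
    return StopCondition::StepTooSmall;
  }
  return std::nullopt;
}
```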

◆ RSGDEachParameterApartBaseOptimizer()

`itk::RSGDEachParameterApartBaseOptimizer::RSGDEachParameterApartBaseOptimizer()` [protected]

◆ ~RSGDEachParameterApartBaseOptimizer()

`itk::RSGDEachParameterApartBaseOptimizer::~RSGDEachParameterApartBaseOptimizer()` [override], [protected], [default]

◆ AdvanceOneStep()

`virtual void itk::RSGDEachParameterApartBaseOptimizer::AdvanceOneStep()` [protected], [virtual]

Advance one step following the gradient direction. This method checks whether a change in direction is required and whether the step length must be reduced.
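The direction check described above can be sketched per parameter. This is a hypothetical standalone function (`AdaptStepLengths` is an invented name), assuming the usual regular-step rule: a sign change in a gradient component between two iterations means the optimizer overshot along that parameter, so that parameter's step length is reduced. The reduction factor of 0.5 is an assumption made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch: halve the step length of every parameter whose
// gradient component changed sign since the previous iteration.
void AdaptStepLengths(const std::vector<double> & gradient,
                      const std::vector<double> & previousGradient,
                      std::vector<double> & stepLengths)
{
  assert(gradient.size() == previousGradient.size());
  assert(gradient.size() == stepLengths.size());
  for (std::size_t i = 0; i < gradient.size(); ++i)
  {
    // A negative product means the two components have opposite signs.
    if (gradient[i] * previousGradient[i] < 0.0)
    {
      stepLengths[i] *= 0.5;
    }
  }
}
```

Only the parameters that oscillate are slowed down; the others keep their current step length, which is the point of treating each parameter apart.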

◆ GetClassName()

`virtual const char * itk::RSGDEachParameterApartBaseOptimizer::GetClassName() const` [virtual]

◆ GetCurrentIteration()

`virtual unsigned long itk::RSGDEachParameterApartBaseOptimizer::GetCurrentIteration() const` [virtual]

◆ GetCurrentStepLength()

`virtual double itk::RSGDEachParameterApartBaseOptimizer::GetCurrentStepLength() const` [virtual]

Get the current average step length.

◆ GetCurrentStepLengths()

`virtual const DerivativeType & itk::RSGDEachParameterApartBaseOptimizer::GetCurrentStepLengths()` [virtual]

Get the array of step lengths, one per parameter.
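The scalar returned by `GetCurrentStepLength()` and the array returned by `GetCurrentStepLengths()` can be related with a small helper. This is a hypothetical sketch (`AverageStepLength` is an invented name) that assumes "average" means the arithmetic mean; the page does not state which average is used.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Hypothetical helper: reduce the per-parameter step lengths to one scalar,
// assuming the arithmetic mean.
double AverageStepLength(const std::vector<double> & stepLengths)
{
  assert(!stepLengths.empty());
  const double sum = std::accumulate(stepLengths.begin(), stepLengths.end(), 0.0);
  return sum / static_cast<double>(stepLengths.size());
}
```

A single scalar like this is convenient for comparing against one minimum step length, even though each parameter moves with its own step.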

◆ GetGradient()

`virtual const DerivativeType & itk::RSGDEachParameterApartBaseOptimizer::GetGradient()` [virtual]

◆ GetGradientMagnitude()

`virtual double itk::RSGDEachParameterApartBaseOptimizer::GetGradientMagnitude() const` [virtual]

Get the current gradient magnitude.
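The gradient magnitude is most naturally read as a Euclidean norm. The sketch below is hypothetical (`GradientMagnitude` as a free function is an invented name) and assumes, as is common in ITK gradient optimizers, that each component is first divided by its parameter scale; that scaling step is an assumption, not something this page states.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: L2 norm of the scaled gradient.
double GradientMagnitude(const std::vector<double> & gradient,
                         const std::vector<double> & scales)
{
  assert(gradient.size() == scales.size());
  double sumOfSquares = 0.0;
  for (std::size_t i = 0; i < gradient.size(); ++i)
  {
    assert(scales[i] != 0.0);
    const double scaled = gradient[i] / scales[i];
    sumOfSquares += scaled * scaled;
  }
  return std::sqrt(sumOfSquares);
}
```

With unit scales this reduces to the plain Euclidean length; for example, the gradient `{3, 4}` has magnitude 5.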

◆ GetGradientMagnitudeTolerance()

`virtual double itk::RSGDEachParameterApartBaseOptimizer::GetGradientMagnitudeTolerance() const` [virtual]

◆ GetMaximize()

`virtual bool itk::RSGDEachParameterApartBaseOptimizer::GetMaximize() const` [virtual]

◆ GetMaximumStepLength()

`virtual double itk::RSGDEachParameterApartBaseOptimizer::GetMaximumStepLength() const` [virtual]

◆ GetMinimize()

`bool itk::RSGDEachParameterApartBaseOptimizer::GetMinimize() const` [inline]

◆ GetMinimumStepLength()

`virtual double itk::RSGDEachParameterApartBaseOptimizer::GetMinimumStepLength() const` [virtual]

◆ GetNumberOfIterations()

`virtual unsigned long itk::RSGDEachParameterApartBaseOptimizer::GetNumberOfIterations() const` [virtual]

◆ GetStopCondition()

`virtual StopConditionType itk::RSGDEachParameterApartBaseOptimizer::GetStopCondition() const` [virtual]

◆ GetValue()

`virtual MeasureType itk::RSGDEachParameterApartBaseOptimizer::GetValue() const` [virtual]

◆ ITK_DISALLOW_COPY_AND_MOVE()

◆ MaximizeOff()

`virtual void itk::RSGDEachParameterApartBaseOptimizer::MaximizeOff()` [virtual]

◆ MaximizeOn()

`virtual void itk::RSGDEachParameterApartBaseOptimizer::MaximizeOn()` [virtual]

◆ MinimizeOff()

`void itk::RSGDEachParameterApartBaseOptimizer::MinimizeOff()` [inline]

◆ MinimizeOn()

`void itk::RSGDEachParameterApartBaseOptimizer::MinimizeOn()` [inline]

◆ New()

`static Pointer itk::RSGDEachParameterApartBaseOptimizer::New()` [static]

Method for creation through the object factory.
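The creation pattern behind `New()` can be shown with a plain C++ analogue. This is a hypothetical sketch (`ExampleOptimizer` is an invented class): the constructor is protected, so callers can only obtain instances through the static `New()`, which returns a reference-counted pointer. ITK's real `New()` consults `itk::ObjectFactory` and returns an `itk::SmartPointer`; `std::shared_ptr` stands in for that here.

```cpp
#include <memory>

// Hypothetical analogue of the ITK "protected constructor + static New()" idiom.
class ExampleOptimizer
{
public:
  using Pointer = std::shared_ptr<ExampleOptimizer>;

  static Pointer New()
  {
    // std::make_shared cannot reach a protected constructor, so call new directly.
    return Pointer(new ExampleOptimizer());
  }

  unsigned long GetNumberOfIterations() const { return m_NumberOfIterations; }

protected:
  ExampleOptimizer() = default;

private:
  unsigned long m_NumberOfIterations{ 100 };
};
```

Writing `ExampleOptimizer e;` outside the class fails to compile, which is how the idiom forces every instance through `New()`.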

◆ PrintSelf()

`void itk::RSGDEachParameterApartBaseOptimizer::PrintSelf(std::ostream & os, Indent indent) const` [override], [protected]

◆ ResumeOptimization()

`void itk::RSGDEachParameterApartBaseOptimizer::ResumeOptimization()`

Resume previously stopped optimization with current parameters.

See also: StopOptimization
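The start/resume/stop protocol can be modelled in a few lines. This is a hypothetical sketch (`LoopModel` is an invented class), assuming that `StartOptimization()` resets the iteration counter and then runs the same loop that `ResumeOptimization()` runs, and that the loop ends when a stop flag is raised. The update `x <- x - 0.5 * x` (gradient descent on f(x) = x^2 / 2 with a fixed step of 0.5) stands in for evaluating the cost function and calling `AdvanceOneStep()`.

```cpp
// Hypothetical model of the Start/Resume/Stop protocol.
class LoopModel
{
public:
  explicit LoopModel(double initialPosition) : m_Position(initialPosition) {}

  void SetNumberOfIterations(unsigned long n) { m_NumberOfIterations = n; }

  // Reset the counter, then run the shared loop.
  void StartOptimization()
  {
    m_CurrentIteration = 0;
    ResumeOptimization();
  }

  // Continue from the current position and iteration count until stopped.
  void ResumeOptimization()
  {
    m_Stop = false;
    while (!m_Stop)
    {
      if (m_CurrentIteration >= m_NumberOfIterations)
      {
        StopOptimization(); // the real class would record MaximumNumberOfIterations
        break;
      }
      m_Position -= 0.5 * m_Position; // stand-in for one optimizer step
      ++m_CurrentIteration;
    }
  }

  void StopOptimization() { m_Stop = true; }

  unsigned long GetCurrentIteration() const { return m_CurrentIteration; }
  double GetPosition() const { return m_Position; }

private:
  unsigned long m_NumberOfIterations{ 100 };
  unsigned long m_CurrentIteration{ 0 };
  double m_Position;
  bool m_Stop{ false };
};
```

For example, starting at 16 with a budget of 3 iterations stops at 2; raising the budget to 5 and resuming continues from 2 to 0.5 instead of restarting from 16.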

◆ SetGradientMagnitudeTolerance()

`virtual void itk::RSGDEachParameterApartBaseOptimizer::SetGradientMagnitudeTolerance(double _arg)` [virtual]

◆ SetMaximize()

`virtual void itk::RSGDEachParameterApartBaseOptimizer::SetMaximize(bool _arg)` [virtual]

Specify whether to minimize or maximize the cost function.
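One flag is enough to serve both directions. The sketch below is hypothetical (`DirectionFlag` is an invented struct); it assumes that the inline `SetMinimize`/`GetMinimize` pair is simply the negation of `SetMaximize`/`GetMaximize`, and that the flag only selects the sign of the step. The default of `false` matches the `m_Maximize { false }` initializer listed on this page.

```cpp
// Hypothetical model of the minimize/maximize switch.
struct DirectionFlag
{
  bool maximize{ false }; // same default as m_Maximize

  void SetMaximize(bool v) { maximize = v; }
  void SetMinimize(bool v) { SetMaximize(!v); }
  bool GetMinimize() const { return !maximize; }

  // +1 moves along the gradient (ascent), -1 against it (descent).
  double StepSign() const { return maximize ? 1.0 : -1.0; }
};
```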

◆ SetMaximumStepLength()

`virtual void itk::RSGDEachParameterApartBaseOptimizer::SetMaximumStepLength(double _arg)` [virtual]

Set/Get parameters to control the optimization process.
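The control parameters and their defaults (taken from the member initializers listed on this page) can be gathered in one place to reason about them. This is a hypothetical sketch: `RSGDSettings`, `IsConsistent` and `HalvingsUntilTooSmall` are invented names, the consistency check is not something the class is documented to enforce, and the halving count assumes each step reduction halves the step.

```cpp
#include <cassert>

// Hypothetical bundle of the control parameters with the documented defaults.
struct RSGDSettings
{
  double maximumStepLength{ 1.0 };
  double minimumStepLength{ 1e-3 };
  double gradientMagnitudeTolerance{ 1e-4 };
  unsigned long numberOfIterations{ 100 };

  // Hypothetical sanity check, not enforced by the real class.
  bool IsConsistent() const
  {
    return minimumStepLength > 0.0 && minimumStepLength <= maximumStepLength &&
           gradientMagnitudeTolerance >= 0.0 && numberOfIterations > 0;
  }
};

// Number of halvings needed for a step starting at maximumStepLength to drop
// below minimumStepLength (assumes each reduction halves the step).
unsigned int HalvingsUntilTooSmall(double maximumStepLength, double minimumStepLength)
{
  assert(minimumStepLength > 0.0);
  unsigned int count = 0;
  for (double step = maximumStepLength; step >= minimumStepLength; step *= 0.5)
  {
    ++count;
  }
  return count;
}
```

With the defaults, `HalvingsUntilTooSmall(1.0, 1e-3)` is 10, because 2^-9 ≈ 0.00195 is still above 0.001 while 2^-10 ≈ 0.000977 is below it.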

◆ SetMinimize()

`void itk::RSGDEachParameterApartBaseOptimizer::SetMinimize(bool v)` [inline]

◆ SetMinimumStepLength()

`virtual void itk::RSGDEachParameterApartBaseOptimizer::SetMinimumStepLength(double _arg)` [virtual]

◆ SetNumberOfIterations()

`virtual void itk::RSGDEachParameterApartBaseOptimizer::SetNumberOfIterations(unsigned long _arg)` [virtual]

◆ StartOptimization()

`void itk::RSGDEachParameterApartBaseOptimizer::StartOptimization()` [override]

◆ StepAlongGradient()

`virtual void itk::RSGDEachParameterApartBaseOptimizer::StepAlongGradient(const DerivativeType &, const DerivativeType &)` [inline], [protected], [virtual]

Advance one step along the corrected gradient, taking into account the step length represented by factor. This method is invoked by AdvanceOneStep. It is expected to be overridden by optimization methods in non-vector spaces.

In RSGDEachParameterApart, this function does not accept a single scalar step-length factor but an array of factors, which contains the step length for each parameter separately.

See also: AdvanceOneStep

Reimplemented in itk::RSGDEachParameterApartOptimizer.

Definition at line 149 of file itkRSGDEachParameterApartBaseOptimizer.h.
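For a vector space, the per-parameter update that `StepAlongGradient` receives can be sketched as follows. This is hypothetical: `StepEachParameterApart` is an invented free function, and it takes the current position explicitly, whereas the real method has only the two `DerivativeType` arguments. It assumes each factor already contains that parameter's step length and the sign (negative when minimizing); one plausible construction is `factor[i] = sign * stepLength[i] / gradientMagnitude`, which this page does not confirm.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical vector-space update with one factor per parameter:
// newPosition[i] = position[i] + factors[i] * gradient[i].
std::vector<double> StepEachParameterApart(const std::vector<double> & position,
                                           const std::vector<double> & factors,
                                           const std::vector<double> & gradient)
{
  assert(position.size() == factors.size());
  assert(position.size() == gradient.size());
  std::vector<double> newPosition(position.size());
  for (std::size_t i = 0; i < position.size(); ++i)
  {
    newPosition[i] = position[i] + factors[i] * gradient[i];
  }
  return newPosition;
}
```

Compared with a single scalar factor, the array lets a parameter whose step was reduced move more cautiously while the others keep their larger steps.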

◆ StopOptimization()

`void itk::RSGDEachParameterApartBaseOptimizer::StopOptimization()`

◆ m_CurrentIteration

`unsigned long itk::RSGDEachParameterApartBaseOptimizer::m_CurrentIteration { 0 }` [private]

◆ m_CurrentStepLength

`double itk::RSGDEachParameterApartBaseOptimizer::m_CurrentStepLength { 0 }` [private]

◆ m_CurrentStepLengths

`DerivativeType itk::RSGDEachParameterApartBaseOptimizer::m_CurrentStepLengths {}` [private]

◆ m_Gradient

`DerivativeType itk::RSGDEachParameterApartBaseOptimizer::m_Gradient {}` [private]

◆ m_GradientMagnitude

`double itk::RSGDEachParameterApartBaseOptimizer::m_GradientMagnitude { 0.0 }` [private]

◆ m_GradientMagnitudeTolerance

`double itk::RSGDEachParameterApartBaseOptimizer::m_GradientMagnitudeTolerance { 1e-4 }` [private]

◆ m_Maximize

`bool itk::RSGDEachParameterApartBaseOptimizer::m_Maximize { false }` [private]

◆ m_MaximumStepLength

`double itk::RSGDEachParameterApartBaseOptimizer::m_MaximumStepLength { 1.0 }` [private]

◆ m_MinimumStepLength

`double itk::RSGDEachParameterApartBaseOptimizer::m_MinimumStepLength { 1e-3 }` [private]

◆ m_NumberOfIterations

`unsigned long itk::RSGDEachParameterApartBaseOptimizer::m_NumberOfIterations { 100 }` [private]

◆ m_PreviousGradient

`DerivativeType itk::RSGDEachParameterApartBaseOptimizer::m_PreviousGradient {}` [private]

◆ m_Stop

`bool itk::RSGDEachParameterApartBaseOptimizer::m_Stop { false }` [private]

◆ m_StopCondition

◆ m_Value

`MeasureType itk::RSGDEachParameterApartBaseOptimizer::m_Value { 0.0 }` [private]

1.11.0 (9b424b03c9833626cd435af22a444888fbbb192d)

1.11.0 (9b424b03c9833626cd435af22a444888fbbb192d)