#include <itkRSGDEachParameterApartOptimizer.h>

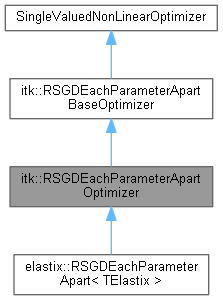

An optimizer based on gradient descent.

This class is almost a copy of the normal itk::RegularStepGradientDescentOptimizer. The difference is that each parameter has its own step length, whereas the normal RSGD has one step length that is used for all parameters.

A single shared step length can cause inaccuracies if, for example, parameters 1, 2, and 3 are already close to the optimum but parameter 4 is not. The step size is then halved for all parameters, so in a worst-case scenario parameter 4 does not have time to reach its optimum.
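The per-parameter idea can be sketched as follows. This is a minimal illustration, not the elastix implementation: the helper name `UpdateStepLengths`, the sign-change test, and the default relaxation factor of 0.5 are assumptions chosen to mirror how a regular-step gradient descent usually shrinks its step.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of elastix): shrink each parameter's step
// length independently whenever its own gradient component changes sign.
void UpdateStepLengths(const std::vector<double> & gradient,
                       const std::vector<double> & previousGradient,
                       std::vector<double> &       stepLengths,
                       double                      relaxationFactor = 0.5)
{
  assert(gradient.size() == previousGradient.size());
  assert(gradient.size() == stepLengths.size());

  for (std::size_t i = 0; i < gradient.size(); ++i)
  {
    // A sign flip suggests that parameter i stepped over its optimum,
    // so only that parameter's step length is reduced.
    if (gradient[i] * previousGradient[i] < 0.0)
    {
      stepLengths[i] *= relaxationFactor;
    }
  }
}
```

With four parameters where only the first three oscillate around their optimum, the fourth keeps its full step length and can continue to move.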

RSGDEachParameterApart stops only if ALL step lengths are smaller than the MinimumStepSize given in the parameter file!
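The stopping rule described above could look roughly like this. It is a sketch under assumptions: `AllStepLengthsBelowMinimum` is a hypothetical free function, and the step lengths are modelled as a plain `std::vector<double>` rather than the optimizer's own array type.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical check (not part of elastix): true only when every
// per-parameter step length has dropped below the minimum step size.
bool AllStepLengthsBelowMinimum(const std::vector<double> & stepLengths,
                                double                      minimumStepSize)
{
  // A non-positive threshold could never be undercut by a positive step.
  assert(minimumStepSize > 0.0);

  return std::all_of(stepLengths.begin(), stepLengths.end(),
                     [minimumStepSize](double s) { return s < minimumStepSize; });
}
```

A single parameter with a large remaining step length is enough to keep the optimization running under this rule.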

Note that this is a quite experimental optimizer, currently only used for some specific tests.

Definition at line 53 of file itkRSGDEachParameterApartOptimizer.h.

Public Types | |

| using | ConstPointer = SmartPointer<const Self> |

| using | CostFunctionPointer = CostFunctionType::Pointer |

| using | Pointer = SmartPointer<Self> |

| using | Self = RSGDEachParameterApartOptimizer |

| using | Superclass = RSGDEachParameterApartBaseOptimizer |

Public Types inherited from itk::RSGDEachParameterApartBaseOptimizer | |

| using | ConstPointer = SmartPointer<const Self> |

| using | Pointer = SmartPointer<Self> |

| using | Self = RSGDEachParameterApartBaseOptimizer |

| enum | StopConditionType { GradientMagnitudeTolerance = 1 , StepTooSmall , ImageNotAvailable , SamplesNotAvailable , MaximumNumberOfIterations , MetricError } |

| using | Superclass = SingleValuedNonLinearOptimizer |

Static Public Member Functions | |

| static Pointer | New () |

Static Public Member Functions inherited from itk::RSGDEachParameterApartBaseOptimizer | |

| static Pointer | New () |

Protected Member Functions | |

| RSGDEachParameterApartOptimizer ()=default | |

| void | StepAlongGradient (const DerivativeType &factor, const DerivativeType &transformedGradient) override |

| ~RSGDEachParameterApartOptimizer () override=default | |

Protected Member Functions inherited from itk::RSGDEachParameterApartBaseOptimizer | |

| virtual void | AdvanceOneStep () |

| void | PrintSelf (std::ostream &os, Indent indent) const override |

| RSGDEachParameterApartBaseOptimizer () | |

| ~RSGDEachParameterApartBaseOptimizer () override=default | |

| using itk::RSGDEachParameterApartOptimizer::ConstPointer = SmartPointer<const Self> |

Definition at line 62 of file itkRSGDEachParameterApartOptimizer.h.

| using itk::RSGDEachParameterApartOptimizer::CostFunctionPointer = CostFunctionType::Pointer |

Definition at line 72 of file itkRSGDEachParameterApartOptimizer.h.

| using itk::RSGDEachParameterApartOptimizer::Pointer = SmartPointer<Self> |

Definition at line 61 of file itkRSGDEachParameterApartOptimizer.h.

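| using itk::RSGDEachParameterApartOptimizer::Self = RSGDEachParameterApartOptimizer |

Standard class typedefs.

Definition at line 59 of file itkRSGDEachParameterApartOptimizer.h.

| using itk::RSGDEachParameterApartOptimizer::Superclass = RSGDEachParameterApartBaseOptimizer |

Definition at line 60 of file itkRSGDEachParameterApartOptimizer.h.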

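| itk::RSGDEachParameterApartOptimizer::RSGDEachParameterApartOptimizer | ( | ) | protected, default |

| itk::RSGDEachParameterApartOptimizer::~RSGDEachParameterApartOptimizer | ( | ) | override, protected, default |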

| virtual const char * itk::RSGDEachParameterApartOptimizer::GetNameOfClass | ( | ) | const, virtual |

Run-time type information (and related methods).

Reimplemented from itk::RSGDEachParameterApartBaseOptimizer.

Reimplemented in elastix::RSGDEachParameterApart< TElastix >.

| itk::RSGDEachParameterApartOptimizer::ITK_DISALLOW_COPY_AND_MOVE | ( | RSGDEachParameterApartOptimizer | ) |

| static Pointer itk::RSGDEachParameterApartOptimizer::New | ( | ) | static |

Method for creation through the object factory.

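| void itk::RSGDEachParameterApartOptimizer::StepAlongGradient | ( | const DerivativeType & factor, const DerivativeType & transformedGradient | ) | override, protected, virtual |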

Advance one step along the corrected gradient, taking into account the step lengths represented by the factor array. This method is invoked by AdvanceOneStep. It is expected to be overridden by optimization methods in non-vector spaces.

Reimplemented from itk::RSGDEachParameterApartBaseOptimizer.
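As an illustration of what an element-wise step with a per-parameter factor array might look like, here is a minimal sketch. It does not reproduce the elastix source: `StepAlongGradientSketch` is a hypothetical free function, plain `std::vector<double>` stands in for `DerivativeType`, and it is assumed that each `factor[i]` already carries both the step length and the direction sign (negative when minimizing).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in (not the elastix member function): move every
// parameter by its own factor times its own gradient component.
std::vector<double> StepAlongGradientSketch(const std::vector<double> & currentPosition,
                                            const std::vector<double> & factor,
                                            const std::vector<double> & transformedGradient)
{
  assert(currentPosition.size() == factor.size());
  assert(currentPosition.size() == transformedGradient.size());

  std::vector<double> newPosition(currentPosition.size());
  for (std::size_t i = 0; i < currentPosition.size(); ++i)
  {
    // Assumption: factor[i] is signed, so adding it descends when negative.
    newPosition[i] = currentPosition[i] + factor[i] * transformedGradient[i];
  }
  return newPosition;
}
```

Because each parameter has its own factor, a small factor for an already converged parameter does not slow down the others.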

Generated on 2024-07-17 for elastix by Doxygen 1.11.0 (9b424b03c9833626cd435af22a444888fbbb192d)